Last date modified: 2026-Feb-25

Developing prompt criteria

The prompt criteria are a set of inputs that give aiR for Review the context it needs to understand the matter and evaluate each document. Developing the prompt criteria is a way of training aiR for Review, which is your "reviewer," similar to training a human reviewer. See Best practices for tips and workflow suggestions.

Depending on which type of analysis you chose during setup, you see a different set of tabs in the prompt criteria panel of the dashboard. The Case Summary tab displays for all analysis types.

When you start to write your first prompt criteria, the fields contain grayed-out, italicized helper text that shows examples of what to enter. Use it as a guideline for writing your own entries.

You can also automatically build initial prompt criteria from existing case documents, like review protocols, requests for production or case memos, by using the prompt kickstarter feature. See Using prompt kickstarter for more information.

If needed, you can create project sets within a single aiR for Review project to develop, validate, and apply prompt criteria without having to create new aiR projects for each iteration. See Using project sets for more information.

To learn more about how prompt versioning works and how versions affect the Viewer, see How version controls affect the Viewer.

See these related resources for more information:

- AI Advantage: Aiming for Prompt Perfection? Level up with Relativity aiR for Review

- Workflows for Applying aiR for Review

- aiR for Review example project

- aiR for Review Prompt Writing Best Practices

- Evaluating aiR for Review Prompt Criteria Performance

- Selecting a Prompt Criteria Iteration Sample for aiR for Review

- Six characteristics of Generative AI and their impact on aiR products

- Relativity aiR Ask the Expert: Generative AI Fundamentals in Relativity

Entering prompt criteria

The tabs that appear on the prompt criteria panel depend on the analysis type you selected during setup. Refer to Setting up the project for more information.

- On the aiR for Review dashboard, you can enter data in the prompt criteria tabs using any of the methods below. Grayed-out, italicized helper text displays in the boxes as a guide for your entries.

- Select each tab and manually enter the required information as outlined in the Prompt criteria tabs and fields section.

- Click the Draft with AI button (prompt kickstarter) to upload and use existing documentation, like requests for production or review protocols, to automatically fill in the tabs with draft criteria. See Using prompt kickstarter for more information.

- Use project sets to develop, validate, and apply prompt criteria using a new data source (saved search) without having to create a new aiR project. See Using project sets for more information.

- Click Save after entering data on each tab or after all tabs are completed.

- After you have the desired prompt criteria set, click Start Analysis to analyze documents. For more information on analyzing documents, see Running the analysis. After job analysis starts, tab fields cannot be edited, unless noted otherwise.

- To validate the prompt criteria, see aiR for Review prompt criteria validation and Setting up aiR for Review prompt criteria validation for more information.

- To run multiple project set iterations on different saved searches within the current aiR for Review project, see Creating a new develop project set.

Prompt criteria tabs and fields

Use the sections below to enter information in the necessary fields.

All prompt criteria tabs combined must not exceed a total of 15,000 characters. An error message displays if the limit is exceeded.
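Because the limit applies to all tabs together, it can help to estimate the combined length of draft text before entering it in the dashboard. The following is a minimal sketch in Python and is not part of aiR for Review. The `draft` structure, the use of tab and field names as dictionary keys, and both helper functions are hypothetical. It also assumes that every character of the entered text counts toward the limit, which this section does not spell out.

```python
# Hypothetical pre-check for draft prompt criteria text; not an aiR for Review API.
MAX_TOTAL_CHARS = 15_000  # combined limit for all prompt criteria tabs


def total_prompt_chars(tabs: dict[str, dict[str, str]]) -> int:
    """Sum the length of every field's text across all tabs."""
    return sum(len(text) for fields in tabs.values() for text in fields.values())


def remaining_chars(tabs: dict[str, dict[str, str]]) -> int:
    """Characters left before the combined limit; negative means over the limit."""
    return MAX_TOTAL_CHARS - total_prompt_chars(tabs)


# Illustrative draft only; the text values are placeholders.
draft = {
    "Case Summary": {
        "Matter Overview": "Plaintiff alleges breach of a supply agreement.",
        "Noteworthy Terms": "Project Falcon: internal code name for the disputed contract.",
    },
    "Relevance": {
        "Relevance Criteria": "Emails or documents pertaining to Project Falcon pricing.",
    },
}

print(f"{total_prompt_chars(draft)} characters used, {remaining_chars(draft)} remaining")
```

A negative result from `remaining_chars` indicates that the draft would likely trigger the error message once entered.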

Case Summary tab

The Case Summary gives the Large Language Model (LLM) the broad context surrounding a matter. It includes an overview of the matter, people and entities involved, and any jargon or terms that are needed to understand the document set.

To ensure optimal results, limit the Case Summary content to 20 or fewer sentences overall, and 20 or fewer entries each of People and Aliases, Noteworthy Organizations, and Noteworthy Terms. Although longer entries can be processed by the system, exceeding these limits may reduce result quality.

This tab appears regardless of the Analysis Type selected during project setup.

Fill out the following:

- Matter Overview—provide a concise overview of the case. Include the names of the plaintiff and defendant, the nature of the dispute, and other important case characteristics.

- People and Aliases—list the names and aliases of key custodians who authored or received the documents. Include their role and any other affiliations.

- Noteworthy Organizations—list the organizations and other relevant entities involved in the case. Highlight any key relationships, entities, or other notable characteristics.

- Noteworthy Terms—list and define any relevant words, phrases, acronyms, jargon, project code names, or slang that might be important to the analysis.

- Additional Context—list any additional information that does not fit the other fields. This section can be left blank.

Depending on which Analysis Type you chose when setting up the project, the remaining tabs will be Relevance, Key Documents, or Issues. Refer to the appropriate tab section below for more information on each one.

For best results when entering information in the Case Summary tab:

- Keep Case Summaries to 20 sentences or fewer. Longer summaries often include excess details, weakening prompt quality and increasing borderline documents. Concise, direct summaries aligned with best practices lead to better results.

- For People and Aliases, avoid listing every person in the case. The system picks up common information, such as work emails. Instead, focus on including people and aliases a typical person could not easily infer, such as unusual or personal email addresses, and only when those details matter for determining document relevance.

Relevance tab

This tab defines the fields and criteria used for determining if a document is relevant to the case. It appears if you selected Relevance or Relevance and Key Documents as the Analysis Type during project setup.

Fill out the following:

- Relevance Field—select a single-choice field that represents whether a document is relevant or non-relevant. This selection cannot be changed after the first job run.

- Relevant Choice—select the field choice you use to mark a document as relevant. This selection cannot be changed after the first job run.

- Relevance Criteria—summarize the criteria that determine whether a document is relevant. Include:

- Keywords, phrases, legal concepts, parties, entities, and legal claims.

- Any criteria that would make a document non-relevant, such as relating to a project that is not under dispute.

- Issues Field (Optional)—select a single-choice or multi-choice field that represents the issues in the case. This selection cannot be changed after the first job run.

- Choice Criteria—select each of the field Choices one by one to be analyzed. A maximum of 10 choices can be analyzed at a time. To remove a selected choice, click the X in its row. For each choice, write a criteria description in the text box that lists the criteria that determine whether that issue applies to a document.

- aiR does not make Issue predictions or coding during Relevance review, but you can use this field for reference when writing the Relevance Criteria. For example, you could tell aiR that documents containing any of the defined issues are relevant. If you want aiR to provide issue predictions, you must run another project using Issues as the analysis type on the documents.

- The field choices cannot be changed after the first job run. However, you can still edit the criteria description in the text box.

- If you delete or modify the name of a field Choice used in the project before running an analysis, an error message appears indicating the choices configured for the Issues Field are not valid. To resolve this, return to the Choice Criteria field within the prompt criteria tab and update it as necessary. For further details about deleting or modifying Choices, refer to Choices.

For best results when writing the Relevance Criteria:

- Limit it to 5-10 sentences.

- Do not use a plain number for the Choice Criteria name, such as 12.

- Do not paste in the original request for production (RFP), since those are often too long and complex to give good results. Instead, summarize it and include relevant excerpts.

- Group similar criteria together when you can. For example, if an RFP asks for “emails pertaining to X” and “documents pertaining to X,” write “emails or documents pertaining to X.”

Key Documents tab

This tab defines the fields and criteria used for determining if a document is "hot" or key to the case. It appears if you selected Relevance and Key Documents as the Analysis Type during project setup.

Fill out the following:

- Key Document Field—select a single-choice field that represents whether a document is key to the case. This selection cannot be changed after the first job run.

- Key Document Choice—select the field choice you use to mark a document as key. This selection cannot be changed after the first job run.

- Key Document Criteria—summarize the criteria that determine whether a document is key. For best results, limit the Key Document Criteria to 5-10 sentences. Include:

- Keywords, phrases, legal concepts, parties, entities, and legal claims.

- Any criteria that would exclude a document from being key, such as falling outside a certain date range.

Issues tab

This tab defines the issue fields and criteria used for determining whether a document relates to the set of topics or issues specified. It appears if you selected Issues as the Analysis Type during project setup.

Fill out the following:

- Field—select a multi-choice field that represents the issues in the case.

- Choice Criteria—select each of the field Choices one by one to be analyzed. A maximum of 10 choices can be analyzed at a time. To remove a selected choice, click the X in its row. For each choice, write a criteria description in the text box that lists the criteria that determine whether that issue applies to a document. You can also populate this information using prompt kickstarter.

- The field choices cannot be changed after the first job run. However, you can still edit the criteria description in the text box.

- If you delete or modify the name of a field Choice used in the project before running an analysis, an error message appears indicating the choices configured for the Issues Field are not valid. To resolve this, return to the Choice Criteria field within the prompt criteria tab and update it as necessary. For further details about deleting or modifying Choices, refer to Choices.

For best results when writing the Choice Criteria:

- Include keywords, phrases, legal concepts, parties, entities, and legal claims.

- Include any criteria that would exclude a document from relating to that issue, such as falling outside a certain date range.

- Be clear and specific; avoid ambiguity.

- Limit the criteria description for each choice to 5-10 sentences.

- Each choice must have its own criteria. If a choice has no criteria, either fill it in or delete the choice.

- Do not use a plain number for the Choice Criteria name, such as 12.
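The guidelines above can be checked on a draft before it is entered in the Issues tab. The following is a minimal sketch in Python, not a feature of aiR for Review. The `check_choice_criteria` helper and the `{choice name: criteria description}` mapping are hypothetical, and the sentence count is a rough approximation that can miscount text containing abbreviations such as "Inc." or "e.g.".

```python
import re

MAX_CHOICES = 10    # maximum number of choices analyzed at a time
MAX_SENTENCES = 10  # upper end of the suggested 5-10 sentences per choice


def check_choice_criteria(choices: dict[str, str]) -> list[str]:
    """Return warnings for a {choice name: criteria description} mapping."""
    warnings = []
    if len(choices) > MAX_CHOICES:
        warnings.append(f"{len(choices)} choices selected; the maximum is {MAX_CHOICES}.")
    for name, criteria in choices.items():
        if name.strip().isdigit():
            warnings.append(f"Choice '{name}' is a plain number; use a descriptive name.")
        if not criteria.strip():
            warnings.append(f"Choice '{name}' has no criteria; fill it in or delete the choice.")
            continue
        # Approximate sentence count: split on ., !, or ? followed by whitespace or end of text.
        sentences = [s for s in re.split(r"[.!?]+(?:\s+|$)", criteria.strip()) if s]
        if len(sentences) > MAX_SENTENCES:
            warnings.append(
                f"Choice '{name}' has about {len(sentences)} sentences; aim for 5-10."
            )
    return warnings


# Illustrative input: one plain-number name and one choice without criteria.
for warning in check_choice_criteria({"12": "Documents about pricing.", "Bribery": ""}):
    print(warning)
```

The sketch only flags the mechanical guidelines. Whether the criteria are clear and specific still requires human judgment.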

Using prompt kickstarter

aiR for Review's prompt kickstarter (the Draft with AI option) lets you automatically draft initial prompt criteria for a project from existing case documents, such as review protocols, requests for production, complaints, or other foundational case documents, instead of writing them from scratch. When you upload up to five documents (with a total character count of up to 150,000), aiR for Review analyzes them to complete the relevant initial prompt criteria. This enables you to start a new project with minimal effort. See Job capacity, size limitations, and speed for more information on document and prompt limits.

You can repeat this process as needed to refine the prompt criteria before starting the first job analysis. After the analysis begins, the Draft with AI option is disabled.

- There are no additional charges to use prompt kickstarter.

- To use prompt kickstarter for Issues projects, be sure to create an Issues field in the workspace before starting the project.
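The upload limits for prompt kickstarter (five documents, 150,000 characters in total, and the .txt, .docx, and .pdf formats listed in the upload step) can be checked before you open the dashboard. The following is a minimal sketch in Python and is not part of aiR for Review. The `check_kickstarter_uploads` helper is hypothetical, and the character counts must be supplied by you, for example from the per-file counts the dashboard displays. It covers files only, because this section does not state whether manually added text counts as one of the five documents.

```python
from pathlib import PurePath

# Limits and formats as stated in this section; the helper itself is hypothetical.
MAX_DOCUMENTS = 5
MAX_TOTAL_CHARS = 150_000
SUPPORTED_SUFFIXES = {".txt", ".docx", ".pdf"}


def check_kickstarter_uploads(char_counts: dict[str, int]) -> list[str]:
    """Return warnings for a {filename: character count} mapping."""
    warnings = []
    if len(char_counts) > MAX_DOCUMENTS:
        warnings.append(
            f"{len(char_counts)} documents selected; the maximum is {MAX_DOCUMENTS}."
        )
    total = sum(char_counts.values())
    if total > MAX_TOTAL_CHARS:
        warnings.append(f"{total:,} characters in total; the maximum is {MAX_TOTAL_CHARS:,}.")
    for name in char_counts:
        if PurePath(name).suffix.lower() not in SUPPORTED_SUFFIXES:
            warnings.append(f"{name}: unsupported file format.")
    return warnings


# Illustrative input: two supported files whose combined length exceeds the limit.
for warning in check_kickstarter_uploads({"review_protocol.docx": 90_000, "rfp.pdf": 70_000}):
    print(warning)
```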

To use prompt kickstarter:

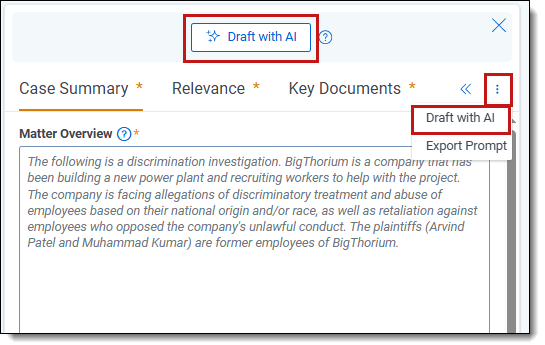

- On the prompt criteria panel, click the Draft with AI button. You can also click the More icon (three vertical dots) and select Draft with AI.

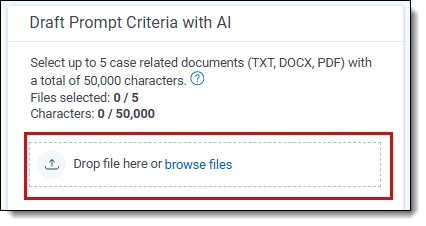

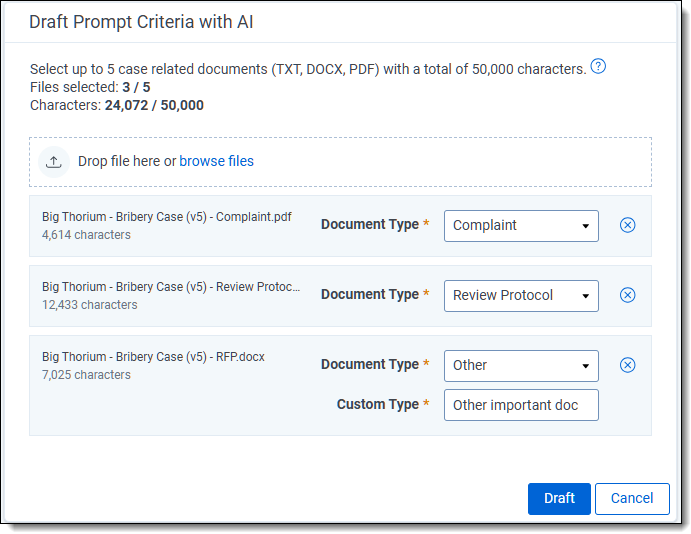

- Upload content using any of the methods below for AI to draft prompt criteria. Counters for the total number of selected files and uploaded characters display at the top and update automatically as changes occur. The maximum number of documents is five, with a combined total character limit of 150,000.

- Drop file here or browse files—drag or upload document files. Supported file formats are .txt, .docx, and .pdf.

- Add text manually—click to manually add text or to copy and paste from another source. You can also use this option to include additional case context for the AI assistant to use.

- Select the Document Type for each uploaded file. Options include: Review Protocol, Request for Production, General Case Memo, Complaint, Key Document, and Other. If you select Other, enter a document type description in the Custom Type box. The number of characters in each file appears below the filename to help you keep track of the 150,000-character limit.

- Repeat steps 2-3 to upload more files.

- To delete a file from the list, click its corresponding circle X icon.

- To use prompt kickstarter to automatically draft Issue descriptions for your prompt criteria, do the following:

- For Issues projects, use the Select Issues Field drop-down list below the documents list. For Relevance or Relevance and Key projects, enable the Include Issues in Relevance Criteria toggle below the documents list. Case issues will be included as part of the criteria for a Relevance analysis. However, documents will not be labeled with issue choices as part of a Relevance analysis.

- Set up the Issues field by selecting a single-choice or multi-choice field to indicate the issues involved in the case. Each option should identify a separate issue for which prompt kickstarter will generate a description. See Issues tab for more details on the fields. For best results drafting Issue descriptions:

- Name each issue choice concisely and descriptively to help the AI model understand and generate accurate outputs. For example, the Choice Criteria name "Anti-bribery policy in Acme Company internal communication" helps the AI generate a description such as "Documents, policies, or internal communications describing or outlining Acme Company's corporate policies related to anti-bribery or anti-corruption with public officials."

- Correct any misspellings.

- Do not use a plain number for the Choice Criteria name, such as 12.

- Upload only relevant documents, and if necessary, include additional case context using the Add text manually option. The AI assistant uses contextual cues from the uploaded files. Providing unrelated or contradictory materials may degrade the quality of the generated output.

If the AI assistant is unable to find relevant information in the uploaded documents for at least one of the selected issues, a message appears. You can try to manually enter the choice criteria description. Alternatively, you can run Draft with AI again after updating the choice name or uploading more content-rich documents.


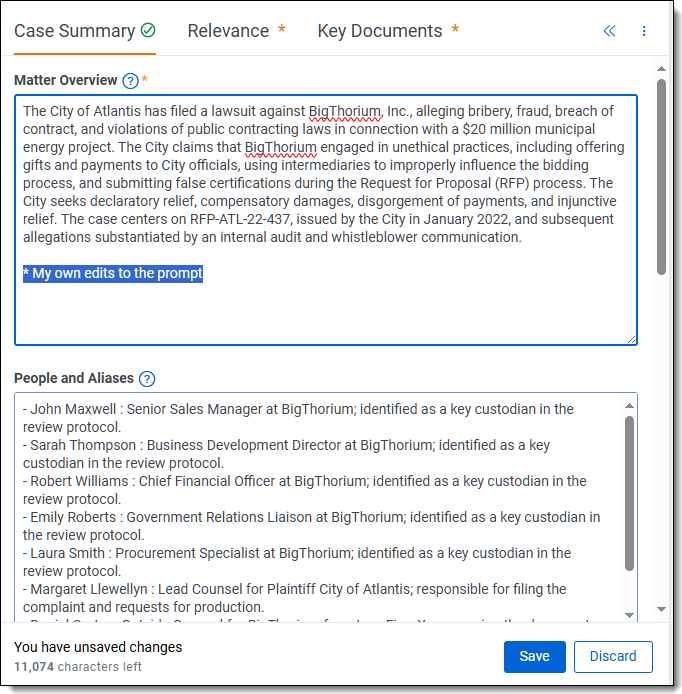

- Click Draft to begin automatically drafting prompt criteria from the uploaded documents or the manually entered content. Results typically display within 1-2 minutes. You cannot run document analysis during the drafting process.

- Review and edit the draft prompt criteria entered in the available tabs. Click Save to keep the changes or Discard to delete them.

- Repeat these steps with other documents and information as needed until the desired set of prompt criteria is achieved.

- Review the available tabs and enter any required information on each.

- After the desired prompt criteria are set, click Start Analysis to analyze documents. For more information on analyzing documents, see Running the analysis. The Draft with AI option is no longer available after the analysis process begins.

Editing and collaboration

If two users edit the same prompt criteria version simultaneously, the most recent save will overwrite previous changes. For this reason, it can be beneficial to have only one user edit a project's prompt criteria at any given time. Defining distinct roles for users when updating prompt criteria may help streamline the process.

To facilitate team collaboration outside of RelativityOne, use the Export option. It exports the contents of the currently displayed prompt criteria to an MS Word file. For more information, see Exporting prompt criteria.

How prompt criteria versioning works

Each aiR for Review project comes with automatic versioning controls so that you can compare results from running different versions of the prompt criteria. Each analysis job that uses a unique set of prompt criteria counts as a new version.

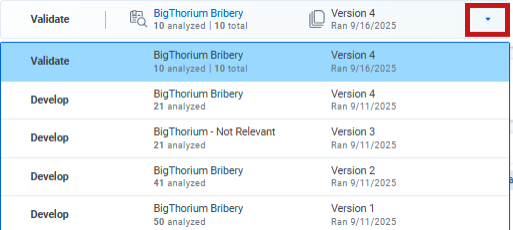

When you run aiR for Review analysis, the initial prompt criteria are saved as Version 1. Edits to the criteria create Version 2, which you can repeatedly modify until you finalize by running the analysis again to see the results. Subsequent edits follow the same pattern, creating new versions that finalize with each analysis run.

To see dashboard results from an earlier version, click the down arrow next to the version name in the project details strip. From there, select the version you want to see.
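The versioning pattern described in this section can be modeled as a small state machine. The following Python sketch is a simplified illustration and does not represent how aiR for Review is implemented. The `PromptCriteriaVersions` class and its method names are hypothetical. The sketch also assumes that running an analysis again without editing the criteria does not create a new version, which follows from the statement that only a unique set of prompt criteria counts as a new version.

```python
class PromptCriteriaVersions:
    """Toy model: edits change a draft, and an analysis run finalizes it as a version."""

    def __init__(self, initial_criteria: str) -> None:
        self.finalized: list[str] = []  # criteria locked in by analysis runs
        self.draft: str | None = initial_criteria  # editable criteria not yet run

    @property
    def current_version(self) -> int:
        """Version number shown while editing or after a run."""
        return len(self.finalized) + (1 if self.draft is not None else 0)

    def edit(self, criteria: str) -> None:
        """Editing after a run starts the next version; further edits modify it."""
        self.draft = criteria

    def run_analysis(self) -> int:
        """Finalize the draft, if any, and return the version that was analyzed."""
        if self.draft is not None:
            self.finalized.append(self.draft)
            self.draft = None
        return len(self.finalized)


versions = PromptCriteriaVersions("initial criteria")
versions.run_analysis()             # initial criteria are saved as Version 1
versions.edit("first revision")     # creates Version 2
versions.edit("second revision")    # still Version 2 until the next run
print(versions.current_version)
print(versions.run_analysis())      # finalizes Version 2
```

In this model, only the last edit before a run is kept in a finalized version, which matches the description of modifying a version repeatedly until an analysis run finalizes it.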

How version controls affect the Viewer

When you select a prompt criteria version from the dashboard, this also changes the version results you see when you click on individual documents from the dashboard. For example, if you are viewing results from Version 2, clicking on the Control Number for a document brings you to the Viewer with the results and citations from Version 2. If you select Version 1 on the dashboard, clicking the Control Number for that document brings you to the Viewer with results and citations from Version 1.

When you access the Viewer from other parts of Relativity, it defaults to showing the aiR for Review results from the most recent version of the prompt criteria. However, you can change which results appear by using the linking controls on the aiR for Review Jobs tab. For more information, see Managing aiR for Review jobs.