Last date modified: 2025-Oct-28

Setting up aiR for Review prompt criteria validation

After developing the prompt criteria, you can run them through the validation process to gather metrics that show whether they are effective and defensible before you use them on a larger data set. Begin by configuring the validation sample settings in aiR for Review. Based on these settings, aiR for Review pulls a random sample and creates a Review Center queue for human evaluation of the sampled documents. After reviewers code the documents in Review Center, you can return to aiR for Review to run the accepted prompt criteria on the larger document set.

This general workflow involves:

1) Setting up the validation sample by choosing the sample size, desired margin of error for the validation statistics, and other settings.

2) Running aiR for Review on the sample to receive relevance predictions.

3) Going to Review Center to begin the human review and coding of the validation sample.

4) Returning to aiR for Review to run the accepted prompt criteria on the larger document set if desired.

For information on coding documents and viewing statistics in Review Center, see Prompt Criteria validation in Review Center.

Follow the steps below to use aiR for Review for prompt criteria validation:

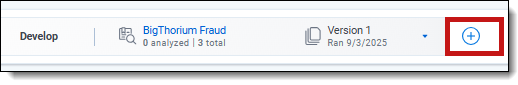

- Choose the desired project set version number to validate.

- Click the project set + sign.

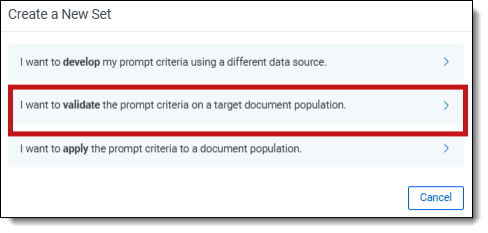

- Click I want to validate the prompt criteria to a document population.

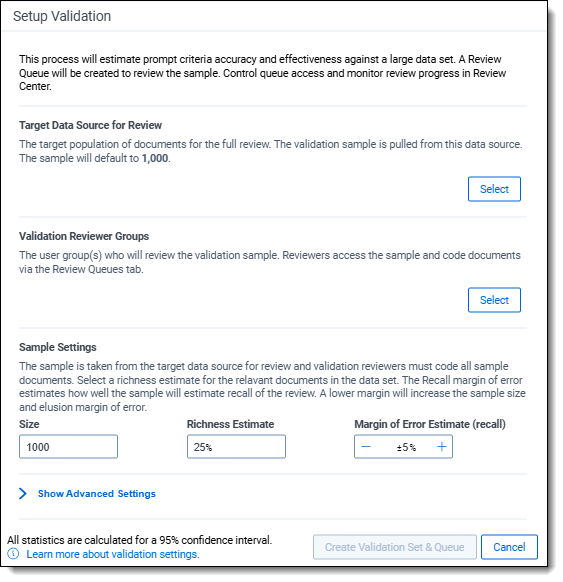

- Fill out the Validation Settings fields to determine the validation sample. For more information on the fields, see Validation Settings fields.

- Click Create Validation Set & Queue. A validation sample set is generated in aiR for Review, and a corresponding queue is established in Review Center for the prompt validation. The project set state transitions from Develop to Validate. For more information on Review Center queues, see Reviewing documents using Review Center.

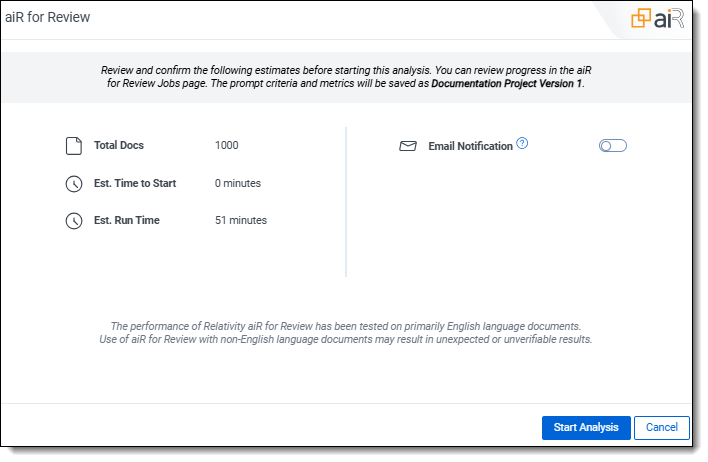

- Review the confirmation summary modal and provide email addresses, if needed, then click Start Analysis. The aiR for Review predictions appear in the Validate Metrics tab. A banner indicates that a validation queue has been created in Review Center so the validation set can be reviewed there. The Analyze button at the top changes to Active Validation Review to show that this project set is now in an active validation review process.

- Click the Go to Review Center button or the View in Review Center link to begin the human review and coding of the validation sample in Review Center.

- The Review Center application opens to the active queue. Refer to Prompt Criteria validation in Review Center for the steps to follow in Review Center.

In Review Center, human reviewers manually code the sample set of documents. The Review Center dashboard generates statistics comparing the human coding to aiR’s predictions.

After reviewers have finished coding all the documents in the sample, you can evaluate the Review Center results against the ones in aiR for Review and decide whether to accept or reject them. Refer to Applying the validated results or developing different prompt criteria for more information.
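To make the comparison between human coding and aiR's predictions concrete, the sketch below tallies agreement for a small sample and derives recall and precision from it. It is an illustration only, not Review Center's implementation: the `ValidationCounts` class, the function names, and the sample data are all hypothetical, and the statistics Review Center actually reports may differ.

```python
from dataclasses import dataclass


@dataclass
class ValidationCounts:
    """Hypothetical tally of human coding versus predicted relevance."""
    true_positives: int   # human: relevant, prediction: relevant
    false_positives: int  # human: not relevant, prediction: relevant
    false_negatives: int  # human: relevant, prediction: not relevant
    true_negatives: int   # human: not relevant, prediction: not relevant


def count_outcomes(human, predicted):
    """Compare two parallel lists of booleans (True means relevant)."""
    if len(human) != len(predicted):
        raise ValueError("human and predicted must have the same length")
    tp = fp = fn = tn = 0
    for coded_relevant, predicted_relevant in zip(human, predicted):
        if coded_relevant and predicted_relevant:
            tp += 1
        elif predicted_relevant:
            fp += 1
        elif coded_relevant:
            fn += 1
        else:
            tn += 1
    return ValidationCounts(tp, fp, fn, tn)


def recall(counts):
    """Share of human-coded relevant documents that were predicted relevant."""
    denominator = counts.true_positives + counts.false_negatives
    return counts.true_positives / denominator if denominator else 0.0


def precision(counts):
    """Share of predicted-relevant documents that humans coded relevant."""
    denominator = counts.true_positives + counts.false_positives
    return counts.true_positives / denominator if denominator else 0.0


# Made-up sample: five documents coded by a reviewer and scored by the model.
human_coding = [True, True, True, False, False]
predictions = [True, True, False, True, False]
counts = count_outcomes(human_coding, predictions)
```

A high recall with low precision suggests the prompt criteria are over-inclusive, while the reverse suggests relevant documents are being missed. Either pattern can inform the decision to accept or reject the results.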

Applying the validated results or developing different prompt criteria

The following outlines the next steps in aiR for Review, depending on whether the validation results were accepted or rejected in Review Center.

If accepted:

- A green check mark displays next to the project set version number in aiR for Review, and it's labeled "Validation Accepted."

- To apply the validated prompt criteria to the larger document set, either click the Run Target Population button or click the project set + sign and select I want to apply the prompt criteria to the target document population. Then review the confirmation summary and click Start Analysis. For details on running an analysis and project sets, refer to Running the analysis and Using project sets. If the data source (saved search) exceeds the maximum of 250,000 documents, split the data into multiple saved searches and apply each one separately to prevent errors.

- After running the accepted prompt criteria version on the target population in aiR for Review, a green check mark and "Review Complete" notification appear next to the project set. No additional validations can be run on the project after validation is accepted.
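When planning how to split a data source that exceeds the 250,000-document maximum, it can help to work out the batches ahead of time. The following is a minimal sketch that assumes you have exported a list of document identifiers; the `split_into_batches` helper is hypothetical and does not create saved searches or call any Relativity API.

```python
MAX_DOCS_PER_RUN = 250_000  # maximum data source size stated above


def split_into_batches(document_ids, max_size=MAX_DOCS_PER_RUN):
    """Split a list of document IDs into consecutive batches of at most max_size."""
    if max_size <= 0:
        raise ValueError("max_size must be positive")
    return [
        document_ids[start:start + max_size]
        for start in range(0, len(document_ids), max_size)
    ]


# Made-up example: 600,000 placeholder IDs become three batches.
batches = split_into_batches(list(range(600_000)))
batch_sizes = [len(batch) for batch in batches]
```

Each resulting batch would correspond to one saved search that is applied separately.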

If rejected:

- A red X displays next to the version number.

- Click the + sign to create a new project set, then repeat the prompt criteria development and validation process.

Validation Settings fields

Enter information into the following fields:

- Target Data Source for Review—select the source from which documents are selected for the validation sample. Be sure the source is the entire set of documents that aiR for Review will eventually analyze to ensure that the sample is representative of the whole review project, regardless of maximum job size. Documents are randomly chosen from this group and may include those used during the prompt criteria development process.

- Validation Reviewer Groups—select the user groups who will review the validation sample. They will access the sample set and code the documents using the Review Center queue tab. We recommend using experienced reviewers for the validation. It can also be helpful if the reviewers were not involved in the prompt criteria creation and iteration process.

- Sample Settings—these settings determine the characteristics of the sample needed to achieve desired validation results.

- Size—enter the number of documents for the validation sample. The default is set to 1000.

- Richness Estimate—enter the estimated percentage of relevant documents in the target data source used to determine sample size and confidence levels. A default value of 25% is commonly used after data culling for production reviews. A lower value will increase the recommended sample size for a given margin of error.

- Margin of Error Estimate (Recall)—click the plus and minus symbols to adjust the desired margin of error for the Recall statistic. The default of 5% MoE is a widely accepted threshold for defensible validation and recall calculations.
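The relationship between sample size, richness, and the recall margin of error can be approximated with a standard normal-approximation formula. The sketch below is an assumption for illustration and is not necessarily the calculation aiR for Review performs: it assumes a 95% confidence level (z = 1.96) and a worst-case expected recall of 50%, and it treats recall as a proportion measured only on the relevant documents in the sample. The function names are hypothetical.

```python
import math

Z_95 = 1.96  # assumption: 95% confidence level


def recall_margin_of_error(sample_size, richness, expected_recall=0.5, z=Z_95):
    """Approximate margin of error for recall, given sample size and richness.

    Recall is measured only on the relevant documents in the sample,
    so the effective sample size is sample_size * richness.
    """
    relevant_in_sample = sample_size * richness
    if relevant_in_sample <= 0:
        raise ValueError("sample must be expected to contain relevant documents")
    variance = expected_recall * (1 - expected_recall)
    return z * math.sqrt(variance / relevant_in_sample)


def sample_size_for_recall_moe(margin_of_error, richness, expected_recall=0.5, z=Z_95):
    """Approximate sample size needed to reach a target recall margin of error."""
    if not 0 < richness <= 1:
        raise ValueError("richness must be in the range (0, 1]")
    if margin_of_error <= 0:
        raise ValueError("margin_of_error must be positive")
    variance = expected_recall * (1 - expected_recall)
    relevant_needed = (z ** 2) * variance / (margin_of_error ** 2)
    return math.ceil(relevant_needed / richness)


# Default-style inputs from the fields above: 25% richness, 5% margin of error.
size_at_25_percent = sample_size_for_recall_moe(0.05, 0.25)
# Lower richness requires a larger sample for the same margin of error.
size_at_10_percent = sample_size_for_recall_moe(0.05, 0.10)
```

Under these assumptions, lowering the richness estimate from 25% to 10% raises the required sample size, which matches the behavior described for the Richness Estimate field.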

Advanced Settings

- Treat Borderlines as Relevant—the toggle is enabled by default. When enabled, documents with a score of 2 (Borderline) are classified as Relevant in the validation results. When the toggle is disabled, Borderline documents are classified as Not Relevant.

- Display aiR Results in Viewer—the toggle is enabled by default. When enabled, reviewers will see aiR for Review’s predictions in the Viewer while reviewing the validation sample. While seeing these decisions can help spot true positives, it may also bias reviewers towards agreeing with the AI-generated predictions. You can disable the toggle to hide predictions.

- Email Notification Recipients—enter email addresses, separated by semicolons, for individuals to be notified when manual queue preparation finishes, a queue becomes empty, or an error occurs during queue population.
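The effect of the Treat Borderlines as Relevant toggle can be sketched as a simple classification rule. Only the Borderline score of 2 comes from the description above. The sketch assumes, for illustration, that scores above 2 count as Relevant and scores below 2 count as Not Relevant, and the `classify_score` function is hypothetical.

```python
BORDERLINE_SCORE = 2  # Borderline score, as described above


def classify_score(score, treat_borderline_as_relevant=True):
    """Return True if a document with this score counts as Relevant.

    Assumption: scores above the Borderline score are Relevant and
    scores below it are Not Relevant.
    """
    if score == BORDERLINE_SCORE:
        return treat_borderline_as_relevant
    return score > BORDERLINE_SCORE


# Made-up scores for five documents.
scores = [1, 2, 2, 3, 4]
relevant_with_toggle_on = sum(classify_score(s) for s in scores)
relevant_with_toggle_off = sum(classify_score(s, False) for s in scores)
```

Because Borderline documents move between the Relevant and Not Relevant groups, changing the toggle can shift the validation statistics even though the underlying predictions stay the same.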