Last date modified: 2026-Feb-10

aiR for Review

aiR for Review harnesses the power of large language models (LLMs) to review the extracted text of documents. It uses generative artificial intelligence (AI) to simulate and accelerate the actions of a human reviewer by finding and describing relevant documents according to the review instructions (prompt criteria) that you provide. The process identifies the documents, describes why they are relevant using natural language, and demonstrates relevance using citations from the document.

A few benefits of the application include:

- Highly efficient, low-cost document analysis

- Quick discovery of important issues, relevant documents, and key documents to the case based on prompt criteria

- Trustworthy predictions, explanations, and citations

- Consistent, cohesive analysis across all documents

Common use cases for aiR for Review include:

- Beginning the review process—prioritize the most important documents to give to reviewers.

- First-pass review—determine what you need to produce and discover essential insights.

- Gaining early case insights—learn more about your matter right from the start.

- Internal investigations—find documents and insights that help you understand the story hidden in your data.

- Analyzing productions from other parties—reduce the effort to find important material and get it into the hands of decision makers.

- Quality control for traditional review—compare aiR for Review's coding predictions to decisions made by reviewers to accelerate QC and improve results.

See these related resources for more information:

- aiR for Review Validation Concepts and Definitions (White paper)

- AI Advantage: Aiming for Prompt Perfection? Level up with Relativity aiR for Review

- A Focus on Security and Privacy in Relativity’s Approach to Generative AI

- Workflows for Applying aiR for Review

- aiR for Review example project

- aiR for Review Prompt Writing Best Practices

- Evaluating aiR for Review Prompt Criteria Performance

- Selecting a Prompt Criteria Iteration Sample for aiR for Review

aiR for Review process overview

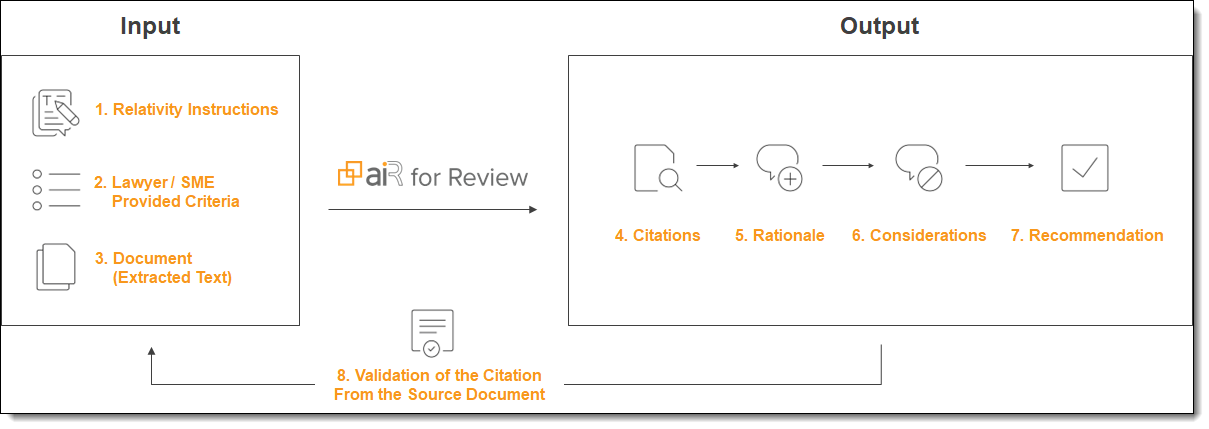

The aiR for Review process uses Relativity instructions, SME-developed prompt criteria, and extracted document text as input. It processes this data to produce document citations with rationale, considerations on possible prediction errors, and its recommendation, such as relevant, not relevant, or borderline. aiR then verifies up to five citations per document to ensure they are accurate and not the result of hallucinations.
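
The idea of checking a citation against its source document can be illustrated with a short Python sketch. This is not how aiR for Review performs its verification, which is not described here. It assumes a simple rule (a citation counts as verified when it appears in the extracted text after whitespace and case are normalized), and the names `normalize` and `check_citations` are hypothetical.

```python
import re


def normalize(text: str) -> str:
    """Collapse whitespace runs and lowercase, so line breaks do not matter."""
    return re.sub(r"\s+", " ", text).strip().lower()


def check_citations(extracted_text: str, citations: list[str], limit: int = 5) -> dict[str, bool]:
    """Report whether each of the first `limit` citations occurs in the text.

    Assumption for illustration only: a citation is "verified" when it is a
    substring of the extracted text after normalization.
    """
    haystack = normalize(extracted_text)
    return {c: normalize(c) in haystack for c in citations[:limit]}


text = "Please shred\nthe Q3 files before Friday."
print(check_citations(text, ["shred the Q3 files", "delete the emails"]))
```

A citation that does not occur in the source text, like the second one here, is the kind of result a verification step is meant to catch.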

aiR for Review phases

The workflow is similar to training a human reviewer: explain the case and its relevance criteria, hand over the documents, and check the results. If the application misunderstood any part of the prompt criteria, simply explain that part in more detail, then try again.

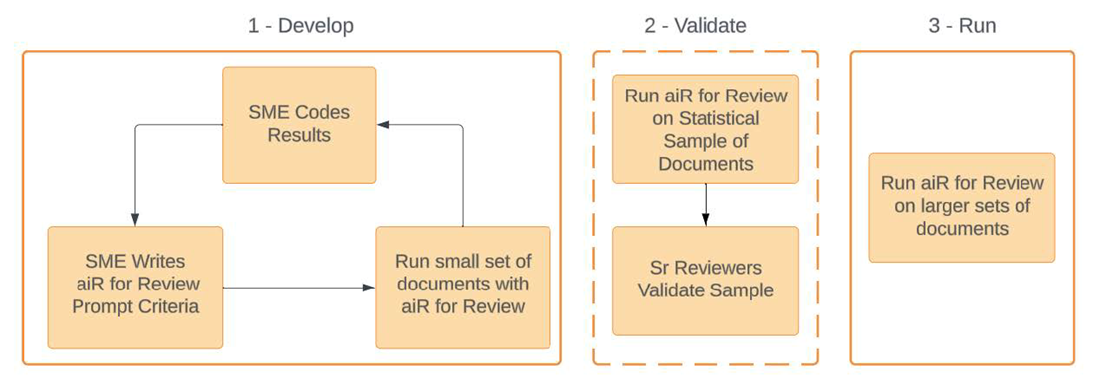

The workflow has three phases:

- Develop—you write and iterate on the prompt criteria (review instructions) and test on a small document set until aiR’s recommendations align sufficiently with expected relevance and issue classifications.

- Validate—run the prompt criteria on a slightly larger set of documents and compare to results from reviewers.

- Apply—use the verified prompt criteria on larger sets of documents or the full set.

Within RelativityOne, the main steps are:

- Select the documents to review. See Choosing the data source for more information.

- Create an aiR for Review project. See Creating an aiR for Review project for more information.

- Write and submit the review instructions, collectively called prompt criteria. See Developing prompt criteria for more information.

- Review the results (citations, rationale, considerations, recommendation). See Analyzing aiR for Review results for more information.

When setting up the first analysis, we recommend running it on a sample set of documents that was already coded by human reviewers. If the resulting predictions are different from the human coding, revise the prompt criteria and try again. This could include rewriting unclear instructions, defining an acronym or a code word, or adding more detail to an issue definition.
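
The comparison against human coding can be sketched in a few lines of Python. This is an illustration only: it assumes the predictions and the human coding decisions have been exported as plain dictionaries keyed by a document identifier. The function name `compare_to_human_coding` and the label strings are hypothetical and not part of any Relativity API. In this sketch, any label other than the positive one, including borderline, is treated as not relevant.

```python
from collections import Counter


def compare_to_human_coding(predictions, human_coding, positive="relevant"):
    """Summarize agreement between model predictions and human coding.

    Both arguments are hypothetical dicts mapping a document ID to a label.
    Only documents present in both dicts are compared.
    """
    shared_ids = sorted(set(predictions) & set(human_coding))
    counts = Counter()
    disagreements = []
    for doc_id in shared_ids:
        predicted = predictions[doc_id] == positive
        actual = human_coding[doc_id] == positive
        if predicted and actual:
            counts["tp"] += 1
        elif predicted:
            counts["fp"] += 1
            disagreements.append(doc_id)
        elif actual:
            counts["fn"] += 1
            disagreements.append(doc_id)
        else:
            counts["tn"] += 1

    def ratio(numerator, denominator):
        # Return None rather than dividing by zero on an empty category.
        return numerator / denominator if denominator else None

    return {
        "compared": len(shared_ids),
        "precision": ratio(counts["tp"], counts["tp"] + counts["fp"]),
        "recall": ratio(counts["tp"], counts["tp"] + counts["fn"]),
        "disagreements": disagreements,
    }
```

The `disagreements` list is the practical output: those are the documents to read when deciding which instruction to rewrite, which acronym to define, or which issue definition needs more detail.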

For additional workflow help and examples, see Workflows for Applying aiR for Review on the Community site.

Analysis review types

aiR for Review offers the following analysis types, each suited to a specific review or investigation phase.

| Analysis Type | Description |
|---|---|
| Relevance | Use for relevance reviews to find documents that are relevant to a case or situation that you describe, such as documents responsive to a production request. |
| Key Documents | Use to find key documents that are "hot" or important to a case or investigation, such as those that might be critical or embarrassing to one party or another. |
| Issues | Use for issues reviews to find documents that include content that falls under specific categories or legal issues that are important to the case. For example, you might use this to check whether documents involve coercion, retaliation, or a combination of both. |

How it works

aiR for Review's analysis is powered by Azure OpenAI's GPT-4 Omni large language model. The LLM is designed to understand and generate human language. It is trained on billions of documents from open datasets and the web.

When you submit prompt criteria and a set of documents to aiR for Review, Relativity sends the first document to Azure OpenAI and asks it to review the document according to the prompt criteria. After Azure OpenAI returns its results, Relativity sends the next document. The LLM reviews each document independently, and it does not learn from previous documents. Unlike Review Center, which makes its predictions based on learning from the document set, the LLM makes its predictions based on the prompt criteria and its built-in training.
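
The one-document-at-a-time behavior described above can be pictured with a small Python sketch. Everything in it is hypothetical: `review_document` is a stand-in that uses a keyword check instead of a real model call, so the example runs offline, and none of the names reflect Relativity's or Azure OpenAI's actual interfaces. The point is the shape of the loop: each call receives only the prompt criteria and one document's text, and nothing carries over between calls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewResult:
    doc_id: str
    recommendation: str
    rationale: str


def review_document(prompt_criteria: str, doc_id: str, extracted_text: str) -> ReviewResult:
    """Hypothetical stand-in for a single LLM call.

    A real call would send the prompt criteria and the text to the model.
    Here a keyword check keeps the sketch runnable offline.
    """
    keyword = prompt_criteria.lower()
    hit = keyword in extracted_text.lower()
    return ReviewResult(
        doc_id=doc_id,
        recommendation="relevant" if hit else "not relevant",
        rationale=f"Text {'mentions' if hit else 'does not mention'} '{keyword}'.",
    )


def run_analysis(prompt_criteria: str, documents: dict[str, str]) -> list[ReviewResult]:
    """Review documents one at a time; no state is shared between calls."""
    results = []
    for doc_id, text in documents.items():
        # Each call receives only the criteria and this document's text.
        results.append(review_document(prompt_criteria, doc_id, text))
    return results


documents = {"A": "The merger closes Friday.", "B": "Lunch at noon?"}
for result in run_analysis("merger", documents):
    print(result.doc_id, result.recommendation)
```

Because no state is shared, submitting the same documents in a different order gives the same result for each document in this sketch, which mirrors the statement that the LLM does not learn from previous documents.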

Azure OpenAI does not retain any data from the documents being analyzed. Data you submit for processing by Azure OpenAI is not retained beyond your organization’s instance, nor is it used to train any other generative AI models from Relativity, Microsoft, or any other third party. For more information, see the white paper A Focus on Security and Privacy in Relativity’s Approach to Generative AI.

For more information on using generative AI for document review, we recommend:

- Relativity Webinar - AI Advantage: How to Accelerate Review with Generative AI

- MIT's Generative AI for Law resources

- The State Bar of California's drafted recommendations for the use of generative AI

Understanding documents and billing

For billing purposes, a document unit is a single document. The initial pre-run estimate may be higher than the actual units billed because of canceled jobs or document errors. To find the actual document units that are billed, see Cost Explorer.

A document will be billed each time it runs through aiR for Review, regardless of whether that document ran before.
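
As a rough illustration of this rule, the arithmetic can be written out in Python. The function name `billed_document_units` and the document IDs are hypothetical, and the sketch ignores canceled jobs and document errors. Cost Explorer remains the source for actual billed units.

```python
def billed_document_units(runs):
    """Return total units: one per document per run, with no deduplication.

    `runs` is a hypothetical list of runs, each a list of the document IDs
    that were processed in that run.
    """
    return sum(len(doc_ids) for doc_ids in runs)


runs = [
    ["DOC-1", "DOC-2", "DOC-3"],  # first pass over three documents
    ["DOC-2", "DOC-3"],           # second pass after revising prompt criteria
]
unique_documents = {doc_id for run in runs for doc_id in run}

print(len(unique_documents))         # 3 distinct documents
print(billed_document_units(runs))   # 5 document units
```

Rerunning two of the three documents adds two units, even though no new documents were introduced.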

Customer may not consolidate documents or otherwise take steps to circumvent the aiR for Review Document Unit limits, including for the purpose of reducing the Customer's costs. If Customer takes such action, Customer may be subject to additional charges and other corrective measures as deemed appropriate by Relativity.

Deployment and processing geographies

When using Relativity's AI technology, customer data may be processed outside of your designated geographic region. For more information, see Deployment and processing geographies.

Language support

The underlying LLM used by aiR for Review has been evaluated for use with 83 languages. While aiR for Review itself has been primarily tested on English-language documents, unofficial testing with non-English datasets shows encouraging results.

If you use the application with non-English datasets, we recommend the following:

- Rigorously follow best practices for writing prompt criteria. For more information, see Best practices.

- Iterate on the prompt criteria. For more information, see Revising the prompt criteria.

- Analyze the extracted text as-is. You do not need to translate it into English.

- When possible, write the prompt criteria in the same language as the documents being analyzed. This should also be the subject matter expert's native language. If that is not possible, write the prompt criteria in English.

When reviewing analysis results, all citations remain in the language of their respective source documents. By default, rationales and considerations are provided in English. To request rationales and considerations in another language, include this sentence within the Additional Context field of your prompt criteria: "Write rationales and considerations in <Language>." While this approach has been effective in many instances, the LLM may not consistently generate output in the specified language.

For the study used to evaluate Azure OpenAI's GPT-4 model across languages, see MEGAVERSE: Benchmarking Large Language Models Across Languages, Modalities, Models and Tasks on the arXiv website.

Analyzing emojis

aiR for Review has not been specifically tested for analyzing emojis. However, the underlying LLM does understand Unicode emojis. It also understands other formats that could normally be understood by a human reviewer. For example, an emoji that is extracted to text as :smile: would be understood as smiling.