Last date modified: 2026-Feb-05

aiR for Review prompt criteria validation

The prompt criteria validation process uses aiR for Review and Review Center in tandem to gather statistical metrics that show whether the prompt criteria are effective and defensible before you use them on a larger data set. You can set up a smaller document sample, oversee reviewers, compare aiR's relevance predictions to actual coding results, and iterate on the prompt criteria as needed.

This functionality is currently only enabled for the Relevance and Relevance & Key analysis types. See Choosing an analysis type for descriptions of these analysis types.

See these related resources for more information:

- Setting up aiR for Review prompt criteria validation

- Prompt Criteria validation in Review Center

- Prompt Criteria validation statistics

- aiR for Review Validation Concepts and Definitions (White paper)

- AI Advantage: Aiming for Prompt Perfection? Level up with Relativity aiR for Review

- Workflows for Applying aiR for Review

- aiR for Review example project

- aiR for Review Prompt Writing Best Practices

- Evaluating aiR for Review Prompt Criteria Performance

- Selecting a Prompt Criteria Iteration Sample for aiR for Review

Prerequisite

To run validation with aiR for Review, the Review Center application must be installed, and users must have the appropriate permissions to each application. See aiR for Review permissions and

Benefits of validation

Using aiR for Review validation helps streamline document review by improving accuracy, reducing rework, boosting collaboration, and ensuring consistent results through AI validation and performance metrics.

The following points highlight key benefits of implementing robust validation and collaboration strategies with aiR for Review.

- Demonstrate defensibility with measurable evidence: Provide concrete evidence of aiR for Review's accuracy by calculating precision, recall, and elusion directly in RelativityOne.

- Prevent costly rework: Identify early whether your prompt criteria need adjusting, before they are used at scale.

- Enhance collaboration between humans and AI: Work in sync with aiR for Review by pinpointing where AI predictions diverge from those of SMEs and adjusting prompt criteria as needed.

- Ensure consistency and foster trust: Build confidence with clients and stakeholders. Validation ensures that aiR for Review’s decision outcomes remain consistent, reducing worries about unpredictability.
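To make the metrics named above concrete, here is a minimal sketch of how precision, recall, and elusion are conventionally computed from a coded validation sample. It uses the standard textbook definitions and treats relevance as a simple yes/no decision; the `ValidationCounts` class, the function names, and the sample numbers are hypothetical, and the statistics reported in RelativityOne may be calculated with additional detail that this sketch does not cover.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ValidationCounts:
    """Outcome counts for a coded validation sample (hypothetical structure)."""
    true_positives: int   # predicted relevant, coded relevant
    false_positives: int  # predicted relevant, coded not relevant
    false_negatives: int  # predicted not relevant, coded relevant
    true_negatives: int   # predicted not relevant, coded not relevant


def _ratio(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator / denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None


def precision(c: ValidationCounts) -> Optional[float]:
    """Share of documents predicted relevant that reviewers coded relevant."""
    return _ratio(c.true_positives, c.true_positives + c.false_positives)


def recall(c: ValidationCounts) -> Optional[float]:
    """Share of documents coded relevant that were also predicted relevant."""
    return _ratio(c.true_positives, c.true_positives + c.false_negatives)


def elusion(c: ValidationCounts) -> Optional[float]:
    """Share of documents predicted not relevant that reviewers coded relevant."""
    return _ratio(c.false_negatives, c.false_negatives + c.true_negatives)


# Illustrative numbers only; they do not come from a real project.
sample = ValidationCounts(
    true_positives=80, false_positives=20, false_negatives=10, true_negatives=390
)
print(f"precision: {precision(sample):.3f}")
print(f"recall:    {recall(sample):.3f}")
print(f"elusion:   {elusion(sample):.3f}")
```

High precision and recall with low elusion suggest the prompt criteria are finding relevant documents without leaving many behind in the set predicted not relevant.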

How validation fits into aiR for Review

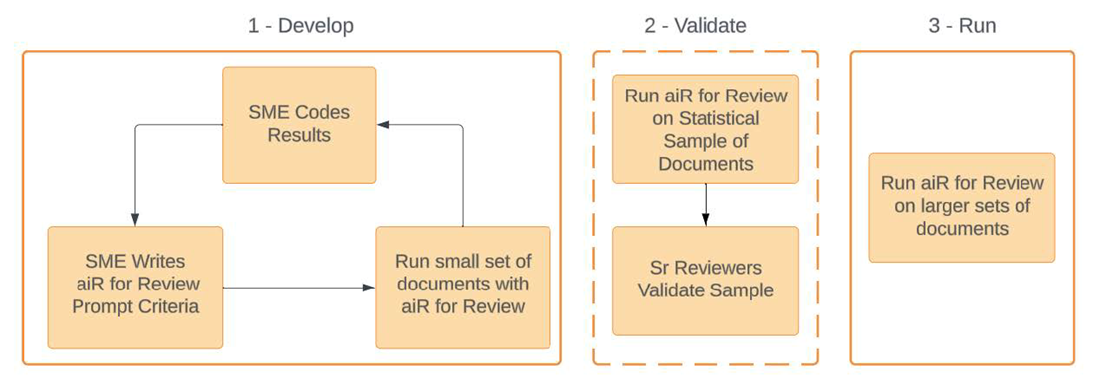

aiR for Review leverages the prompt criteria across a three-phase workflow:

- Develop—user writes and iterates on the prompt criteria and tests on a small document set until aiR’s recommendations align sufficiently with expected relevance and issue classifications. See Developing prompt criteria for more information.

- Validate—user leverages the integration between aiR for Review and Review Center to compare results and validate the prompt criteria. See Setting up aiR for Review prompt criteria validation for more information.

- Apply—user applies the verified prompt criteria on much larger sets of documents. See Applying the validated results or developing different prompt criteria for more information.

The prompt criteria validation process covers phase 2, Validate.

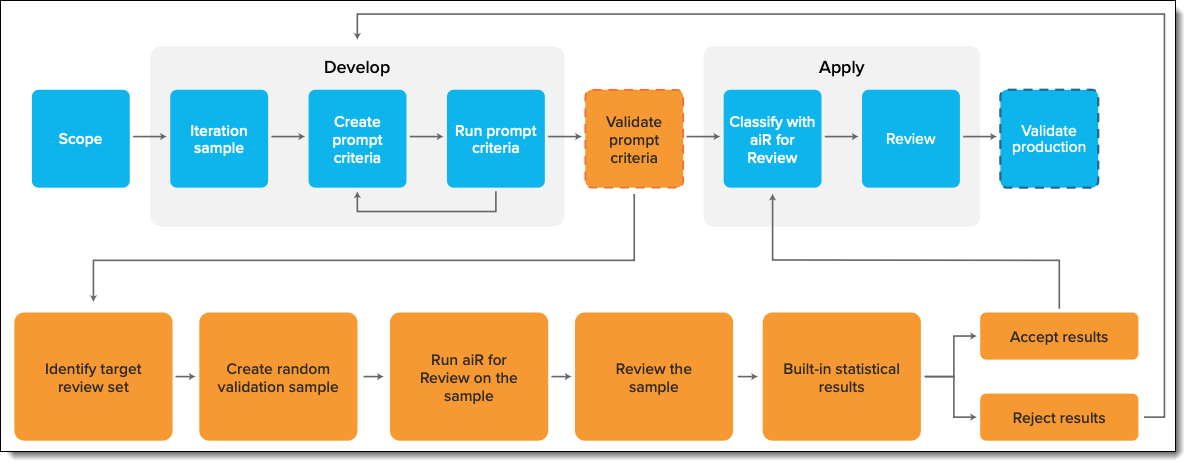

High-level prompt criteria validation workflow

This diagram details the steps (in orange) for prompt criteria validation, which you can perform once the develop phase is complete.

The Validate and Apply phases involve several steps that cross between aiR for Review and Review Center:

| Process Flow | Application Used |
|---|---|
| 1. Identify target review set & choose validation sample settings. | aiR for Review |
| 2. Run aiR for Review on the sample to receive predictions. | aiR for Review |
| 3. Manually review and code the documents in the validation sample. | Review Center |
| 4. Evaluate built-in statistical results of the validation. | Review Center |
| 5. Accept or reject the validation results. | Review Center |
| 6. Optionally apply or improve the prompt criteria. | aiR for Review |
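The comparison at the heart of steps 2 through 4 can be illustrated outside the product. The following is a rough sketch, not how Review Center works internally: it pairs each sample document's prediction with the reviewer's coding decision, tallies the four possible outcomes, and lists the documents where the two disagree. The function name, the outcome labels, the document identifiers, and the yes/no treatment of relevance are all assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, List, Tuple


def compare_predictions(
    predicted: Dict[str, bool], coded: Dict[str, bool]
) -> Tuple[Counter, List[str]]:
    """Tally outcomes for documents that have both a prediction and a coding decision.

    Both arguments map a document identifier to True (relevant) or
    False (not relevant). Returns the outcome counts and the identifiers
    of documents where the prediction and the coding decision differ.
    """
    counts: Counter = Counter()
    disagreements: List[str] = []
    for doc_id, coded_relevant in coded.items():
        if doc_id not in predicted:
            continue  # skip documents without a prediction
        predicted_relevant = predicted[doc_id]
        if predicted_relevant and coded_relevant:
            counts["true_positive"] += 1
        elif predicted_relevant and not coded_relevant:
            counts["false_positive"] += 1
            disagreements.append(doc_id)
        elif not predicted_relevant and coded_relevant:
            counts["false_negative"] += 1
            disagreements.append(doc_id)
        else:
            counts["true_negative"] += 1
    return counts, disagreements


# Hypothetical sample of four documents.
predicted = {"DOC-001": True, "DOC-002": True, "DOC-003": False, "DOC-004": False}
coded = {"DOC-001": True, "DOC-002": False, "DOC-003": True, "DOC-004": False}

counts, disagreements = compare_predictions(predicted, coded)
print(dict(counts))
print(disagreements)
```

The disagreement list is the natural starting point for step 6: reading those documents shows where the prompt criteria and the reviewers part ways.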