Last date modified: 2026-Feb-27

Prompt Criteria validation statistics

aiR for Review Prompt Criteria validation provides several metrics for evaluating your Prompt Criteria. Together, these metrics can help you determine whether the Prompt Criteria will work as expected across the full data set.

Because Prompt Criteria validation is checking the effectiveness of the Prompt Criteria, rather than checking completeness of a late-stage review, these statistics are calculated slightly differently than standard Review Center validation statistics. For the standard Review Center metrics, see Review validation statistics.

For more details on the differences between Prompt Criteria validation statistics and standard Review Center statistics, see How Prompt Criteria validation differs from other validation types.

See these related pages:

- aiR for Review prompt criteria validation

- Setting up aiR for Review prompt criteria validation

- Prompt Criteria validation in Review Center

Defining the validation statistics

Prompt Criteria validation centers on the following statistics. For all of these, it uses a 95% confidence interval:

- Elusion rate—the percentage of documents that aiR predicted as non-relevant, but that were actually relevant.

- Precision—the percentage of documents that aiR predicted as relevant that were truly relevant.

- Recall—the percentage of truly relevant documents that were found using the current Prompt Criteria.

- Richness—the percentage of relevant documents across the entire document set.

- Error rate—the percentage of documents that received errors in aiR for Review.

In everyday terms, you can think of these as:

- Elusion rate: "How much of what we’re leaving behind is relevant?"

- Precision: "How much junk is mixed in with what we think is relevant?"

- Recall: "How much of the relevant stuff can we find?”

- Richness: "How much of the overall document set is relevant?"

- Error rate: "How many documents aren't being read at all?"

For each of these metrics, the validation queue assumes that you trust the human coding decisions over aiR's predictions. It does not second-guess human decisions.

Validation does not check for human error. We recommend that you conduct your own quality checks to make sure reviewers are coding consistently.

How documents are categorized for calculations

aiR for Review tracks one field that represents whether a document is relevant or non-relevant, with only one choice for Relevant. Any other choices available on that field are considered non-relevant.

When you validate Prompt Criteria, Review Center uses that field to categorize coding decisions:

- Relevant—the reviewer selected the relevant choice.

- Non-relevant—the reviewer selected a different choice.

- Skipped—the reviewer skipped the document.

aiR for Review's relevance predictions have a similar set of possible values:

- Positive—The document's score is greater than or equal to the cutoff.

- Negative—The document's score is at least zero, but less than the cutoff.

- Error—The document's score is -1, meaning that aiR for Review could not process the document.

At the end of validation, a slider appears in the Validation Stats area to adjust the cutoff score. The cutoff you choose determines which scores are considered positive. For example, if you set the cutoff score at 2 (Borderline Relevant), all document scores of 2, 3, or 4 are considered positive predictions. If you set the cutoff score to 3 (Relevant), only scores of 3 or 4 are considered positive. For more information, see Choosing a cutoff score.

Variables used in the metric calculations

Based on the possible combinations of coding decisions and relevance predictions, Review Center uses the following categories when calculating statistics. Each of these categories, except for Skipped, is represented by a variable in the statistical equations.

| Document Category | Variable for Equations | Coding Decision | aiR Prediction |

|---|---|---|---|

|

Error |

E |

(any) |

error |

|

Skipped |

(N/A) |

skipped |

(any) |

|

True Positive |

TP |

relevant |

positive |

|

False Positive |

FP |

non-relevant |

positive |

|

False Negative |

FN |

relevant |

negative |

|

True Negative |

TN |

non-relevant |

negative |

Different regions and industries may use "relevant," "responsive," or "positive" to refer to documents that directly relate to a case or project. For the purposes of these calculations, the terms are used interchangeably.

How validation handles skipped documents

We strongly recommend coding every document in the validation queue. Skipping documents reduces the accuracy of the validation statistics. The Prompt Criteria validation statistics ignore skipped documents and do not count them in the final metrics.

Prompt Criteria validation metric calculations

When you validate a set of Prompt Criteria, each metric is calculated as follows.

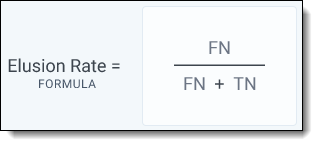

Elusion rate

This is the percentage of documents that aiR predicted as non-relevant, but that are actually relevant.

Elusion = (False negatives) / (False negatives + true negatives)

The elusion rate gives an estimate of how many relevant documents would be missed if the current Prompt Criteria were used across the whole document set.

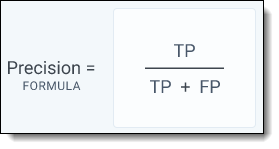

Precision

This is the percentage of documents that aiR predicted as relevant that were truly relevant.

Precision = (True positives) / (True positives + false positives)

Precision shares a numerator with the recall metric, but the denominators are different. In precision, the denominator is "what the Prompt Criteria predicts is relevant;" in recall, the denominator is "what is truly relevant."

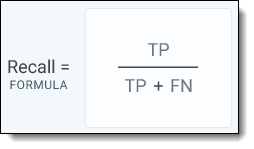

Recall

This is the percentage of truly relevant documents that were found using the current Prompt Criteria.

Recall = (True positives) / (True positives + false negatives)

Recall shares a numerator with the precision metric, but the denominators are different. In recall, the denominator is "what is truly relevant;" in precision, the denominator is "what the Prompt Criteria predicts is relevant."

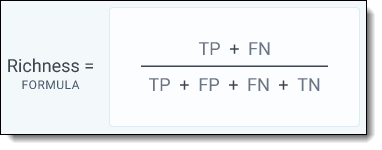

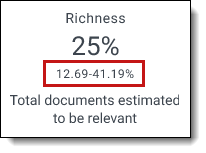

Richness

This is the percentage of relevant documents across the entire review.

Richness = (True positives + false negatives) / (True positives + false positives + false negatives + true negatives)

The richness calculation counts the final number of positive documents, then divides it by all groups except errored or skipped documents.

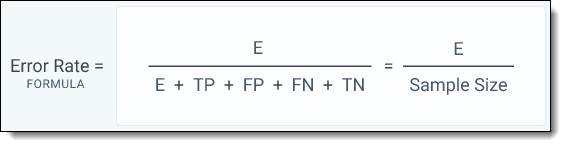

Error rate

This is the percentage of documents that could not be processed by aiR for Review and received a score of -1.

Error rate = (Errored documents) / (Errors + true positives + false positives + false negatives + true negatives)

This could also be written as:

Error rate = (Errored documents) / (Sample size)

The error rate counts all errors, then divides them by the total number of documents in the sample.

How the confidence interval works

When Review Center reports validation statistics, it includes a range underneath the main estimate. The main estimate is the "point estimate," meaning the value we estimated from the sample, and the range beneath it is the confidence interval.

Prompt Criteria validation uses a 95% confidence interval when reporting statistics. This means that 95% of the time, the range contains the true statistic for the entire document set.

This interval uses the Clopper-Pearson calculation method, which is statistically conservative. It is also asymmetrical, so the upper and lower limits of the range may be different distances from the point estimate. This is especially true when the point estimate is close to 100% or 0%. For example, if a validation sample shows 99% recall, there's lots of room for that to be an overestimate, but it cannot be an underestimate by more than one percentage point.

How Prompt Criteria validation differs from other validation types

aiR for Review Prompt Criteria validation queues have a few key differences from prioritized review validation queues in Review Center. The main metrics are the same, but some of the details vary.

In Prompt Criteria validation:

- The validation typically takes place early in the review process, instead of towards the end.

- Skipped documents are excluded from calculations entirely, instead of counting as an unwanted result.

- The validation sample is taken from all documents in the data set, regardless of whether they were previously coded. This leads to some slight differences in the metric calculations.