Last date modified: 2026-Feb-26

Analyzing aiR for Review results

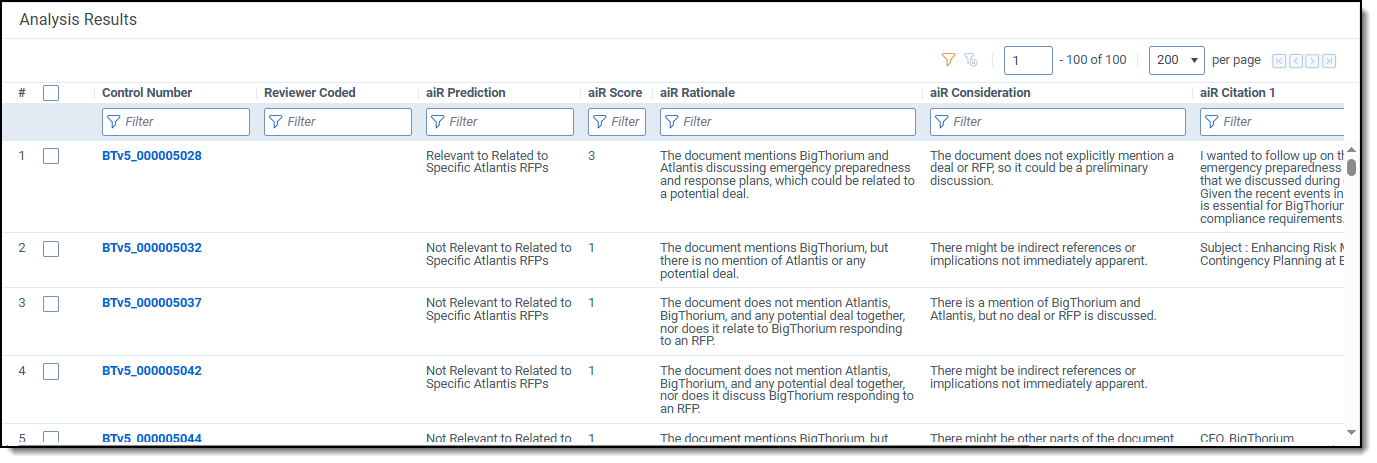

When aiR for Review analyzes documents, it makes predictions about the relevance of documents to different topics or issues. If it predicts that a document is relevant or relates to an issue, it includes a written justification of that prediction, as well as a counterargument and in-text citations. You can view these predictions, citations, and justifications either in the Viewer or as fields on document lists.

How aiR for Review analysis results work

After analyzing a document, aiR for Review provides a Prediction, a Score with recommendations on how to categorize the document, and the supporting Rationale for the prediction. This analysis has several parts:

- aiR Prediction—the relevance, key, or issue label that aiR predicts should apply to the document. See Predictions versus document coding.

- aiR Score—a numerical score that indicates how strongly relevant the document is or how well it matches the predicted issue. See Understanding document scores.

- aiR Rationale—an explanation of why aiR chose this score and prediction.

- aiR Considerations—a counterargument in which aiR argues against its own prediction, identifying the assumptions it is making and any additional facts that could reverse the prediction.

- aiR Citation [1-5]—excerpts from the document that support the prediction and rationale, with a maximum of five citations.

In general, citations are left empty for non-relevant documents and documents that don't match an issue. However, aiR occasionally provides a citation for low-scoring documents if it helps to clarify why it was marked non-relevant. For example, if aiR is searching for changes of venue, it might cite an email that ends with "Hang on, gotta run, more later" as worth noting, even though it does not consider this a true change of venue request.

You can use this information to help update and improve your prompt criteria.
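If you export these results for your own analysis, it can help to hold each document's results in a single record. The following Python sketch shows one hypothetical in-memory shape: the class name `AirResult` and its attribute names are assumptions that mirror the documented columns, not a Relativity API. It enforces only two documented limits: the -1 to 4 score range and the maximum of five citations.

```python
from __future__ import annotations

from dataclasses import dataclass, field

MAX_CITATIONS = 5  # documented maximum: aiR Citation [1-5]


@dataclass
class AirResult:
    """Hypothetical record of one document's aiR for Review results.

    Attribute names mirror the documented columns (aiR Prediction, aiR Score,
    aiR Rationale, aiR Considerations, aiR Citation 1-5); they are not an
    official Relativity API.
    """
    prediction: str
    score: int
    rationale: str
    considerations: str
    citations: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Scores run from -1 (error) to 4 (very relevant).
        if not -1 <= self.score <= 4:
            raise ValueError(f"score must be between -1 and 4, got {self.score}")
        if len(self.citations) > MAX_CITATIONS:
            raise ValueError(f"at most {MAX_CITATIONS} citations are allowed")

    def citation_columns(self) -> dict[str, str]:
        """Spread citations across five fixed columns, leaving unused ones empty."""
        padded = self.citations + [""] * (MAX_CITATIONS - len(self.citations))
        return {f"aiR Citation {i}": text for i, text in enumerate(padded, start=1)}
```

For example, a result with a single citation fills `aiR Citation 1` and leaves citations 2 through 5 empty, which matches how non-relevant documents often have no citations at all.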

Document summaries

On the Summaries tab of the document grid, aiR for Review provides a descriptive topic and a summary of each document. These are the same for each document across all projects. They are not affected by the project settings, prompt criteria, or other documents.

The generated columns are:

- aiR Topic—the descriptive topic aiR generated for this document.

- aiR Content Summary—the summary aiR generated for this document’s content.

These columns may populate before or after the analysis results. Topics and summaries are generated only when you run one of the main analysis types.

Documents with an empty summary field

If the document has minimal content or unintelligible text, the aiR Topic will be “Poor Quality Content,” and it will not have an aiR Content Summary. Re-analyzing these documents will return a similar result.

If there is no content for either the aiR Topic or the aiR Content Summary, this means an error occurred when generating them. These errors are rare, and they do not affect whether the main document analysis succeeded. For more information, see How document errors are handled.
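The two cases above can be told apart with a short check. This Python sketch assumes you have the aiR Topic and aiR Content Summary values as strings, or `None` when a field is empty; the function name and its return labels are hypothetical. It reads "no content for either" as either field being empty outside the poor-quality case, which is an interpretation of the rule rather than a documented algorithm.

```python
from __future__ import annotations

from typing import Optional

POOR_QUALITY_TOPIC = "Poor Quality Content"  # topic documented for unusable text


def summary_status(topic: Optional[str], summary: Optional[str]) -> str:
    """Classify a document's topic and summary fields (hypothetical labels)."""
    topic = (topic or "").strip()
    summary = (summary or "").strip()
    if topic == POOR_QUALITY_TOPIC and not summary:
        # Minimal or unintelligible text; re-analyzing gives a similar result.
        return "poor_quality"
    if not topic or not summary:
        # A generation error; the main analysis results are unaffected.
        return "generation_error"
    return "ok"
```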

Predictions versus document coding

Even though aiR refers to the relevance, key, and issue fields during its analysis, it does not code documents or write to these fields. All of aiR's results are stored in aiR-specific fields, such as the Prediction field. This makes it easier to compare aiR's predictions to human coding while refining the prompt criteria.

If you have refined a set of prompt criteria to the point that you are comfortable adopting those predictions, you can copy those predictions to the coding fields using mass-tagging or other methods.
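Before mass-tagging, you might measure how often aiR's predictions agree with human coding on a sample of documents. This Python sketch assumes you have exported (aiR Prediction, human coding) label pairs for the same documents; the function name and the label strings in the example are hypothetical. No particular agreement threshold is implied; that decision stays with your review team.

```python
from __future__ import annotations

from collections import Counter
from typing import Iterable, Tuple


def compare_to_coding(pairs: Iterable[Tuple[str, str]]) -> Tuple[float, Counter]:
    """Return (agreement rate, count of each (aiR, human) label pair).

    Each pair is (aiR Prediction, human coding decision) for one document.
    """
    counts = Counter(pairs)
    total = sum(counts.values())
    agreed = sum(n for (air, human), n in counts.items() if air == human)
    rate = agreed / total if total else 0.0
    return rate, counts
```

With one `("Relevant", "Relevant")` pair, one `("Relevant", "Not Relevant")` pair, and two `("Not Relevant", "Not Relevant")` pairs, the agreement rate is 0.75, and the `("Relevant", "Not Relevant")` count identifies the disagreement worth inspecting when refining the prompt criteria.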

For ideas on how to integrate aiR for Review results into a larger review workflow, see Using aiR for Review with Review Center.

Variability of results

Due to the nature of large language models, output results may vary slightly from one run to another, even using the same inputs. aiR's scores may shift slightly, typically between adjacent levels, such as from 1-not relevant to 2-borderline. Significant changes, like moving from 4-very relevant to 1-not relevant, are rare.
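To see how much scores moved between two runs of the same documents and prompt criteria, you could group documents by the size of their score change. This Python sketch assumes each run is a dict mapping a document identifier to its aiR Score; the identifiers and the function name are hypothetical. Errored documents (score -1) are skipped because -1 is not a relevance level.

```python
from __future__ import annotations


def score_shifts(run_a: dict[str, int], run_b: dict[str, int]) -> dict[int, list[str]]:
    """Group documents scored in both runs by absolute score change."""
    shifts: dict[int, list[str]] = {}
    for doc_id in sorted(run_a.keys() & run_b.keys()):
        a, b = run_a[doc_id], run_b[doc_id]
        if a < 0 or b < 0:
            continue  # -1 marks an errored document, not a real score
        shifts.setdefault(abs(a - b), []).append(doc_id)
    return shifts
```

For `{"DOC1": 4, "DOC2": 1, "DOC3": 2}` and `{"DOC1": 4, "DOC2": 2, "DOC3": 2}`, the result is `{0: ["DOC1", "DOC3"], 1: ["DOC2"]}`. Any key of 2 or more points to the rarer, larger shifts that deserve a closer look.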

Understanding document scores

aiR scores documents from 0 to 4 according to how relevant they are or how well they match an issue. The higher the number, the more relevant the document is predicted to be.

A score of -1 is assigned to any errored documents. They cannot receive a normal score because they were not properly analyzed.

Below are the aiR for Review scores:

| Score | Description |
|---|---|
| 4 | Very Relevant: The document is predicted very relevant to the issue. aiR found direct, strong evidence that the content relates to the case or issue. Citations show the relevant text. |
| 3 | Relevant: The document is predicted relevant to the issue. Citations show the relevant text. |
| 2 | Borderline Relevant: The document is predicted borderline relevant. aiR found some content that might relate to the case or issue. It usually has citations. |
| 1 | Not Relevant: The document is predicted not relevant. aiR did not find any evidence that it relates to the case or issue. |
| 0 | Junk: The document contains no useful information or is considered “junk” data, such as system files, an empty document, or sets of random characters. |
| -1 | Error: The document either encountered an error or could not be analyzed. For more information, see How document errors are handled. |
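When summarizing a run, it can be useful to translate scores into the labels in this table. This Python sketch hard-codes the table's mapping; the names `SCORE_LABELS`, `label_for`, and `score_distribution` are hypothetical, and any score outside -1 to 4 is treated as unexpected.

```python
from __future__ import annotations

from collections import Counter
from typing import Iterable

# Labels copied from the aiR for Review score table.
SCORE_LABELS = {
    4: "Very Relevant",
    3: "Relevant",
    2: "Borderline Relevant",
    1: "Not Relevant",
    0: "Junk",
    -1: "Error",
}


def label_for(score: int) -> str:
    """Return the table label for a score, rejecting values outside -1 to 4."""
    try:
        return SCORE_LABELS[score]
    except KeyError:
        raise ValueError(f"unexpected aiR score: {score}") from None


def score_distribution(scores: Iterable[int]) -> dict[str, int]:
    """Count documents per label, listing every label from highest score down."""
    counts = Counter(label_for(s) for s in scores)
    return {SCORE_LABELS[s]: counts.get(SCORE_LABELS[s], 0)
            for s in sorted(SCORE_LABELS, reverse=True)}
```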

How document errors are handled

If aiR encounters a problem when analyzing a document, it will not return results for that document. Instead, it scores the document as -1 and returns an error message in the Error Details column. Your organization is not charged for any errored documents, and they do not count towards your organization's aiR for Review total document count.

The possible error messages are:

| Error message | Description | Retry? |
|---|---|---|
| Completion is not valid JSON | The large language model (LLM) encountered an error. | Yes |
| Failed to parse completion | The LLM encountered an error. | Yes |
| Document text is empty | The extracted text of the document was empty. | No |
| Document text is too long | The document's extracted text was too long to analyze. | No |
| Document text is too short | The document's extracted text was too short to analyze. | No |
| Model API error occurred | A communication error occurred between the LLM and Relativity. This is usually a temporary problem. | Yes |
| Uncategorized error occurred | An unknown error occurred. | Yes |
| Ungrounded citations detected in completion | The results for this document may include an ungrounded citation. For more information, see Ungrounded citations. | Yes |

If the Retry? column says Yes, running the same document a second time may succeed. If it says No, running that document again will always return an error.

If you retry a document and keep receiving the same error, the document may have permanent problems that aiR for Review cannot process.
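The Retry? column can drive a simple re-run policy. In this Python sketch, the message strings are copied from the error table; the function name and the `max_attempts` cap are hypothetical choices, not aiR settings. Unrecognized messages are treated as not retryable so they get human attention instead of being re-run indefinitely.

```python
from __future__ import annotations

# Retry? values copied from the aiR for Review error table.
RETRYABLE = {
    "Completion is not valid JSON": True,
    "Failed to parse completion": True,
    "Document text is empty": False,
    "Document text is too long": False,
    "Document text is too short": False,
    "Model API error occurred": True,
    "Uncategorized error occurred": True,
    "Ungrounded citations detected in completion": True,
}


def should_retry(error_message: str, attempts_so_far: int, max_attempts: int = 2) -> bool:
    """Decide whether to queue an errored document for another run.

    max_attempts is a hypothetical policy cap: once a document keeps failing,
    stop retrying and treat it as a possible permanent problem.
    """
    if not RETRYABLE.get(error_message, False):
        return False
    return attempts_so_far < max_attempts
```

For documents that still fail with "Ungrounded citations detected in completion" after the last attempt, the recommendation in Ungrounded citations applies: review them manually.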

In rare cases, there may be an error generating the document's topic or summary. As long as the main document analysis succeeded, these are not considered errored documents. An error in the aiR Topic or aiR Content Summary field does not affect any of the other results.

You can re-run the document to try to regenerate the topic or summary, but the document will be charged normally.

Ungrounded citations

An ungrounded citation may occur for two reasons:

- The citation cannot be found anywhere in the document text. This is usually caused by formatting issues. However, because the LLM could be citing sentences that have no source, we mark it as a possible ungrounded citation.

- The citation comes from text that is part of the full prompt but not part of the document itself. For example, it might cite text from the prompt criteria instead of the document's extracted text.

When aiR receives the analysis results from the LLM, it checks all citations against the prompt text. Documents with possible ungrounded citations are marked as errors and receive a score of -1 instead of the score they were originally assigned. If retrying these documents does not succeed, we recommend reviewing them manually.

Actual ungrounded citations are extremely rare. However, highly structured documents, such as Excel spreadsheets and PDF forms, are more likely to confuse the detector and trigger these errors.
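The two causes of ungrounded citations can be approximated with a text-matching check. This Python sketch is not aiR's actual detector, whose logic is not described here; it assumes citations are verbatim excerpts and normalizes whitespace and letter case so that simple formatting differences do not cause false alarms. The function names and return labels are hypothetical, and empty citation slots are ignored.

```python
from __future__ import annotations

import re
from typing import Iterable


def _normalize(text: str) -> str:
    """Collapse whitespace runs and lowercase to tolerate formatting differences."""
    return re.sub(r"\s+", " ", text).strip().lower()


def classify_citation(citation: str, document_text: str, prompt_criteria: str) -> str:
    """Return 'grounded', 'from_prompt', or 'not_found' for one citation."""
    cite = _normalize(citation)
    if cite and cite in _normalize(document_text):
        return "grounded"
    if cite and cite in _normalize(prompt_criteria):
        return "from_prompt"  # text came from the prompt, not the document
    return "not_found"  # formatting mismatch, or text with no source


def is_possibly_ungrounded(citations: Iterable[str], document_text: str,
                           prompt_criteria: str) -> bool:
    """True if any non-empty citation fails the check (the document would score -1)."""
    return any(
        classify_citation(c, document_text, prompt_criteria) != "grounded"
        for c in citations
        if c.strip()
    )
```

A sketch like this also suggests why heavily structured documents are harder: when extracted text is split across cells or form fields, an excerpt may not appear as one contiguous string.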