Last date modified: 2026-Feb-20

Data Analysis

Data Analysis is a combination of machine stages that identify PI, link PI to identified entities, normalize data, and prepare data for review and reporting.

There are two types of data, and when it comes to finding PI, Data Breach Response treats each one differently.

- Structured data—data that is organized in a specific and predefined way, typically in a table with columns and rows where each data point has a specific data type.

Examples of structured documents include databases, spreadsheets, and CSV files. PI detection leverages the predictable layout of rows and columns. Data Breach Response identifies table boundaries, analyzes headers, and applies pre-built or custom detectors to specific fields. This structured format allows PI types to be detected with high precision, while also enabling entity linking and normalization across consistent data points.

- Unstructured data—unlabeled or otherwise unorganized data. The content of these files is usually composed of natural language.

In unstructured documents, such as emails or text files, PI detection relies on text-based analysis rather than fixed positions. Data Breach Response uses pattern-based and context-driven methods to locate PI within free-form text, applying rules like regular expressions and contextual cues to reduce false positives.
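The difference between the two approaches can be illustrated with a minimal Python sketch. It is not the Data Breach Response implementation: the SSN pattern, the header keywords, the context cues, the 20-character window, and both function names are assumptions chosen only for illustration.

```python
import csv
import io
import re

# Hypothetical detector: a US Social Security number pattern.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# Hypothetical header names that suggest a column holds SSNs.
SSN_HEADERS = {"ssn", "social security number"}

# Hypothetical context cues that must appear shortly before a match in free text.
SSN_CUES = ("ssn", "social security")


def detect_structured(csv_text):
    """Apply the detector only to columns whose header suggests an SSN."""
    reader = csv.DictReader(io.StringIO(csv_text))
    hits = []
    for row_number, row in enumerate(reader, start=1):
        for header, value in row.items():
            # Skip ragged rows (missing or surplus cells).
            if header is None or value is None:
                continue
            if header.strip().lower() in SSN_HEADERS and SSN_PATTERN.fullmatch(value.strip()):
                hits.append((row_number, header, value.strip()))
    return hits


def detect_unstructured(text, window=20):
    """Keep a regex match only if a context cue appears just before it."""
    hits = []
    for match in SSN_PATTERN.finditer(text):
        preceding = text[max(0, match.start() - window):match.start()].lower()
        if any(cue in preceding for cue in SSN_CUES):
            hits.append(match.group())
    return hits
```

In this sketch, a value shaped like an SSN in an `order_id` column is ignored because the header does not indicate an SSN, and a matching number in free text is ignored unless a cue such as "SSN" appears within the window before it. Both checks show how structure or context can reduce false positives.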

Run Data Analysis

To run Data Analysis:

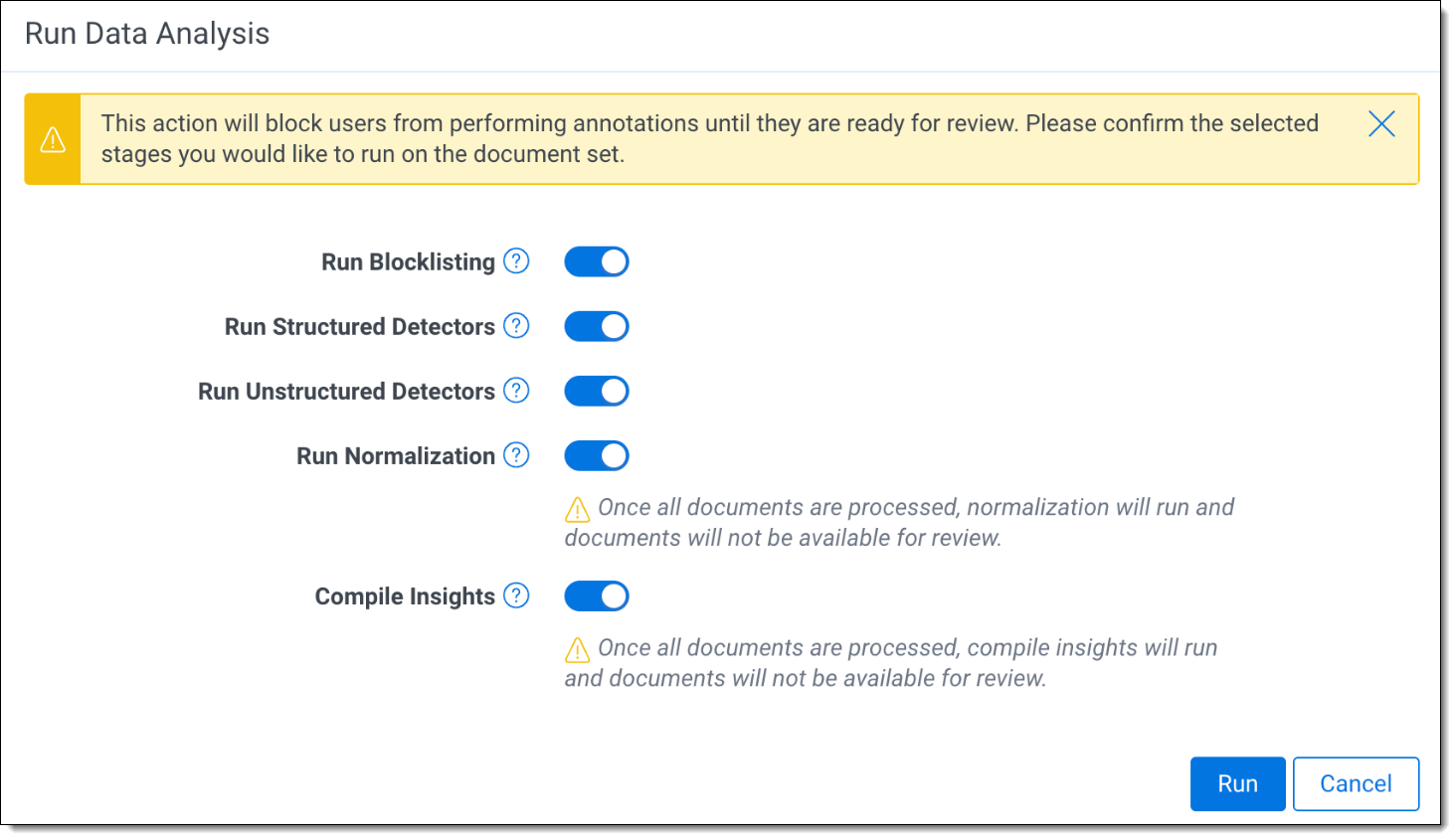

- Select Run Data Analysis in the console.

- Select the Data Analysis stages you want to run.

Review the information in Choose stages to run and Data Analysis stages for more details.

- Click Run to start Data Analysis.

Choose stages to run

You can configure Data Analysis to run all stages or only some of them. Depending on your goal for the run, it may be helpful to select only a subset of stages.

Common use cases when running Data Analysis include:

- Run Blocklisting

- Run Unstructured Detectors

- Run Structured Detectors

- Run Normalization

- Compile Insights

The initial Entity Centric Report is generated from spreadsheet entities only. Entities from unstructured documents will appear on the Entity Centric Report when they are linked in the document viewer.

QC review primarily focuses on refining detectors and potentially blocklisting false hits. At this stage, having an up-to-date Entity Centric Report is not the priority. For this reason and to reduce runtime, run the following stages only:

- Run Blocklisting

- Run Unstructured Detectors

- Run Structured Detectors

- Compile Insights

Just as in the QC process, detectors may be refined during Review. If you wish to make detector or blocklist updates during Review, you can run the same stages listed above for QC review.

If you wish to just generate updated versions of the Reviewer Progress and/or Document Report, run the following stage only:

- Compile Insights

During the deduplication process, entities may be merged or unmerged, or Deduplication Settings may be updated. Detectors are not typically updated at this stage.

If changes have been made to structured documents, for example adding or removing PI, since the entity report was last generated, include Run Structured Detectors. This stage is responsible for automatically linking names and PI in structured documents to create entities. Running Structured Detectors ensures those links are up to date for the entity report.

For more detailed information on each stage of Data Analysis, see Data Analysis stages.
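The stage selections described in this section can be summarized as a lookup. The following Python sketch is illustrative only: the use-case labels (`full_run`, `qc_review`, `review`, `reports_only`) and the `stages_for` helper are hypothetical names, and the deduplication case is omitted because its stage list is not spelled out above.

```python
# Stage names as they appear in the console, in the order they are listed.
ALL_STAGES = [
    "Run Blocklisting",
    "Run Unstructured Detectors",
    "Run Structured Detectors",
    "Run Normalization",
    "Compile Insights",
]

# Stages used when refining detectors or the blocklist (Normalization is skipped).
DETECTOR_REFINEMENT = {
    "Run Blocklisting",
    "Run Unstructured Detectors",
    "Run Structured Detectors",
    "Compile Insights",
}

# Hypothetical use-case labels mapped to the stages named in the text.
STAGES_BY_USE_CASE = {
    "full_run": set(ALL_STAGES),
    "qc_review": DETECTOR_REFINEMENT,
    "review": DETECTOR_REFINEMENT,
    "reports_only": {"Compile Insights"},
}


def stages_for(use_case):
    """Return the stages to select for a use case, in listed order."""
    selected = STAGES_BY_USE_CASE[use_case]
    return [stage for stage in ALL_STAGES if stage in selected]
```

For example, `stages_for("reports_only")` returns only `Compile Insights`, which matches the guidance for refreshing the Reviewer Progress or Document Report.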

Data Analysis stages

The following table lists the Data Analysis stages, their descriptions, and use cases:

| Stage | Description | Can documents be reviewed while the stage is running? | When to run the stage |
|---|---|---|---|
| Run Blocklisting | The blocklist consists of terms added from the Blocklisting tool that will not be detected as PI. PI detectors will ignore new detections that match the blocklisted terms, and prior detections matching blocklisted terms will be marked as Blocklisted and have their links broken. Manually added detections that match terms in the blocklist will not be removed. If blocklisting is run when no changes have been made to the blocklist, the stage status will show as Skipped in the Progress section. | No | |
| Run Unstructured Detectors | Identifies PI by running all enabled PI detectors on unlocked unstructured documents. As soon as unstructured detectors have finished processing on a document, the document becomes available for review. | Yes | |
| Run Structured Detectors | Identifies PI by running all enabled PI detectors on unlocked structured documents. In addition, all names and PI from structured documents are automatically linked. As soon as structured detectors have finished processing on a document, the document becomes available for review. | Yes | |
| Run Normalization | Standardizes names and PI into consolidated entities and generates an updated entity report. | No | |
| Compile Insights | Calculates and consolidates PI and entity statistics for reporting. | No | |

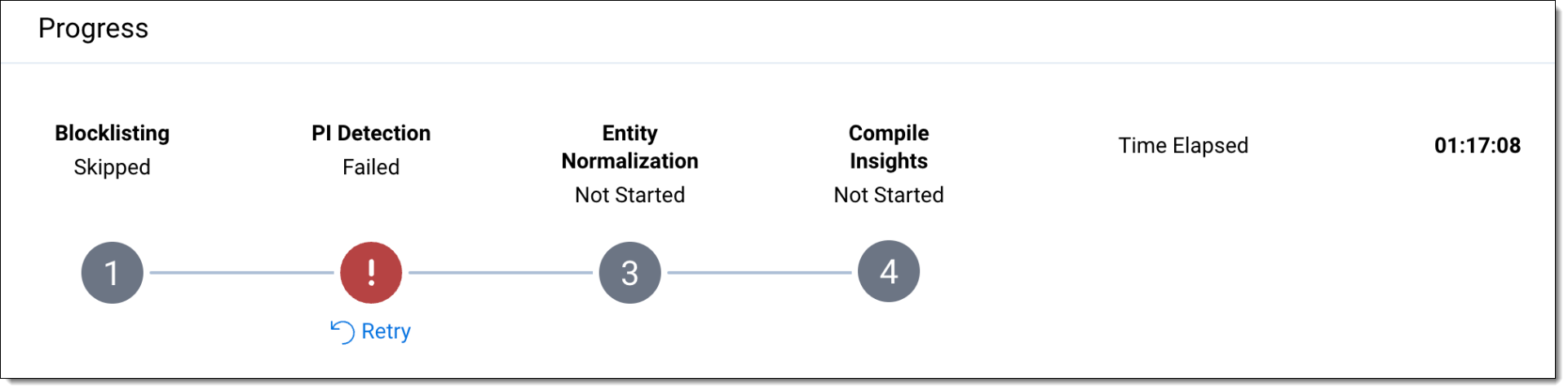

Monitor Data Analysis status

A run’s progress can be monitored on the Data Analysis page. Data Analysis breaks down each stage into sections that include dashboard summaries, sub-job details, and counts.

Overall progress

Overall progress can be monitored using the status shown in the Progress section:

- Not Started—the stage has not begun.

- Still Running—the stage is in the middle of processing.

- Completed—the stage has finished processing successfully.

- Completed with Failures—the stage has finished processing and some items encountered failures during processing.

- Failed—the stage has finished processing and many items encountered failures during processing, so the stage is considered to have failed.

- Skipped—the stage was not run.

- Interrupted—the stage was stopped in the middle of processing.

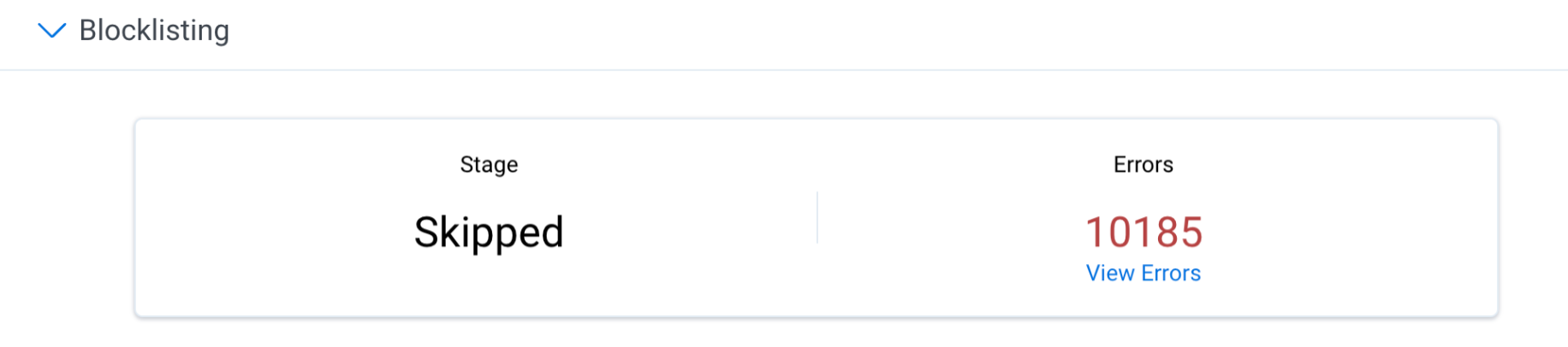

Blocklisting

The following details appear in the Blocklisting section:

- Stage—the current status of the stage.

- Errors—the number of errors that occurred during blocklisting. You can retry these errors. See Cancel and retry Data Analysis for details.

If Blocklisting is run but there have been no changes to the blocklist, the status section displays a Skipped status.
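The blocklisting rules described for this stage can be sketched in Python as follows. This is a simplified model, not the product's code: the `Detection` class, its fields, the `run_blocklisting` function, and the case-insensitive exact-match comparison are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    text: str
    manual: bool = False                 # True when a reviewer added it by hand
    status: str = "Active"               # hypothetical default status label
    linked_entity: Optional[str] = None  # entity the detection is linked to, if any


def run_blocklisting(detections, blocklist, last_applied_blocklist):
    """Apply the blocklist in place and return the resulting stage status."""
    # No blocklist changes since the previous run: the stage is skipped.
    if set(blocklist) == set(last_applied_blocklist):
        return "Skipped"

    # Assumption: terms are compared case-insensitively and must match exactly.
    terms = {term.lower() for term in blocklist}
    for detection in detections:
        # Manually added detections are never removed by the blocklist.
        if not detection.manual and detection.text.lower() in terms:
            detection.status = "Blocklisted"
            detection.linked_entity = None  # break the link to its entity
    return "Completed"
```

Passing the same terms for `blocklist` and `last_applied_blocklist` returns `Skipped` without touching any detection, which mirrors the Skipped status described above.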

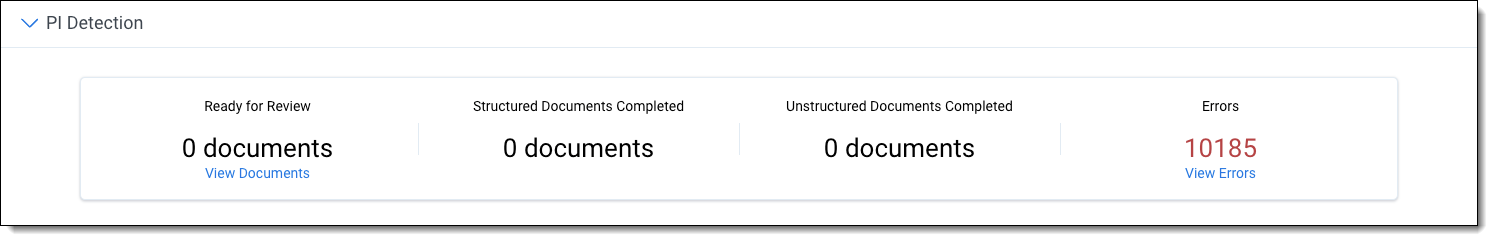

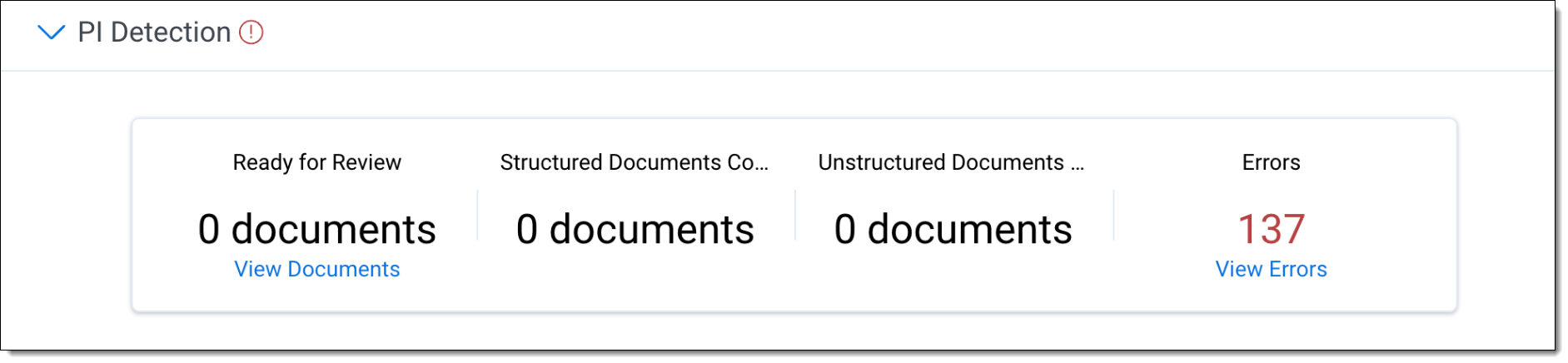

PI Detection

The following details appear in the PI Detection section:

- Ready for Review—the number of documents that have finished processing through the PI Detection stage and can start to be reviewed. View Documents will take you to the Project Dashboard to view these documents. See Data Analysis and document review for more information.

- Structured Documents Completed—the number of structured documents that have finished processing through the PI Detection stage. Structured and unstructured detection run in parallel.

- Unstructured Documents Completed—the number of unstructured documents that have finished processing through the PI Detection stage. Structured and unstructured detection run in parallel.

- Errors—the number of errors that occurred during PI Detection. View Errors will take you to the Project Dashboard to view these errors.

You can retry these errors. See Cancel and retry Data Analysis for details.

When running Data Analysis, you can choose to run only unstructured or only structured detection. If one is not run, its Documents Completed count will remain zero. For example, if only unstructured detection is run, Structured Documents Completed will display zero documents because the structured detectors were not run.

Entity Normalization & Deduplication

The following details appear under Entity Normalization & Deduplication:

- Address Standardization—aligns addresses into a single address format or consistent address formats

- Normalizer—consolidates annotation links and records into entities. Merges records with the same PI.

- Stage—the substage currently being run

- Completion—percent completion of the stage

- Errors—the number of errors that occurred during Entity Normalization & Deduplication. View Errors will take you to the Project Dashboard to view these errors.

You can retry these errors. See Cancel and retry Data Analysis for details.
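To make the Normalizer's merge step concrete, here is a minimal Python sketch that groups records sharing a PI value into one entity. It assumes "the same PI" means at least one identical PI value, and the record shape (`name`, `pi`) and the `merge_records` function are hypothetical; the product's actual matching rules and Deduplication Settings are not modeled.

```python
def merge_records(records):
    """Group records that share at least one PI value into entities."""
    parent = list(range(len(records)))

    def find(i):
        # Follow parent pointers to the group's root, shortening the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    first_seen = {}  # PI value -> index of the first record containing it
    for index, record in enumerate(records):
        for value in record["pi"]:
            if value in first_seen:
                # Same PI value seen before: join the two groups.
                parent[find(index)] = find(first_seen[value])
            else:
                first_seen[value] = index

    entities = {}
    for index, record in enumerate(records):
        entity = entities.setdefault(find(index), {"names": set(), "pi": set()})
        entity["names"].add(record["name"])
        entity["pi"].update(record["pi"])
    return list(entities.values())
```

With this approach, two records for "Ana Diaz" and "A. Diaz" that carry the same identifier end up in a single entity holding both name variants and the union of their PI.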

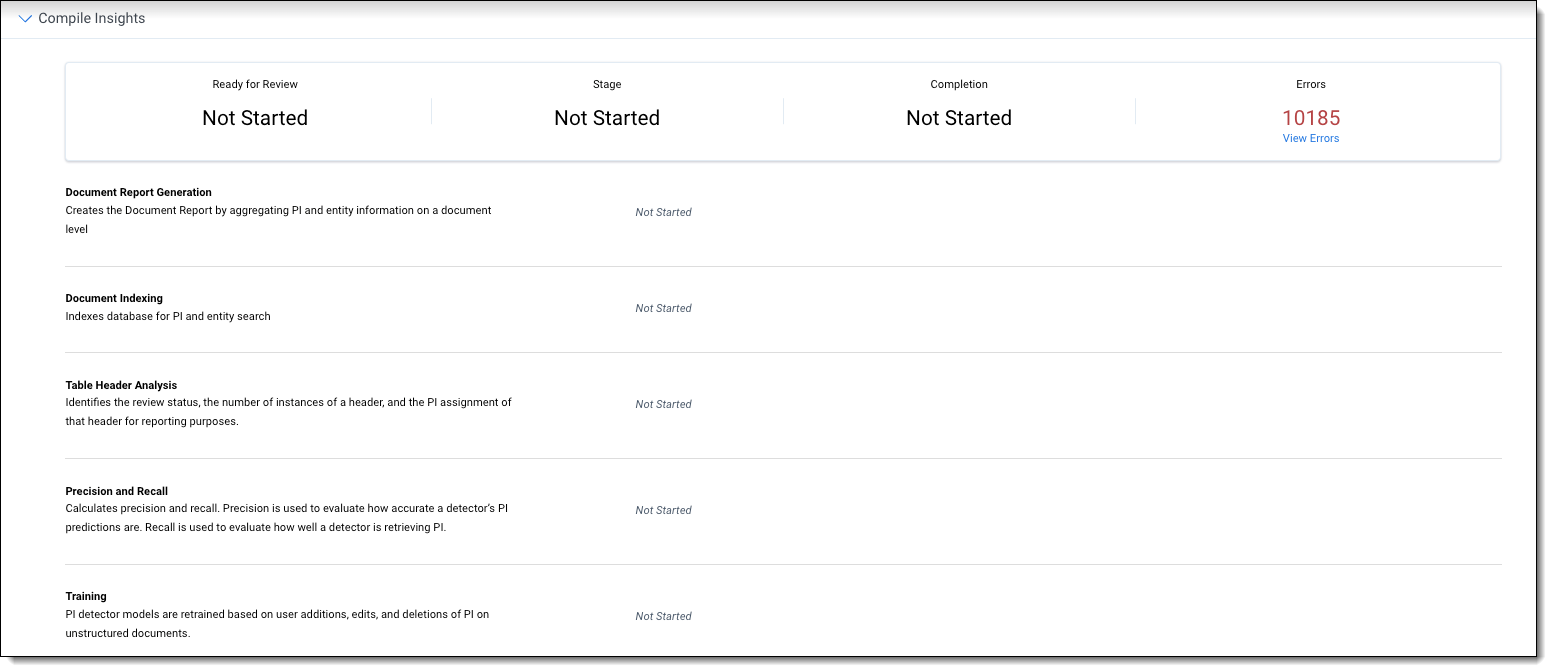

Compile Insights

The following details appear under Compile Insights:

- Document Report Generation—creates the Document Report by aggregating PI and entity information on a document level.

- Document Indexing—indexes the database for PI and entity search.

- Table Header Analysis—identifies the review status, the number of instances of a header, and the PI assignment of that header for reporting purposes.

- Precision and Recall—calculates precision and recall. Precision is used to evaluate how accurate a detector’s PI predictions are. Recall is used to evaluate how well a detector is retrieving PI.

- Training—PI detector models are retrained based on user additions, edits, and deletions of PI on unstructured documents.

- Stage—the substage currently being run

- Completion—percent completion of the stage

- Errors—the number of errors that occurred during Compile Insights. View Errors will take you to the Project Dashboard to view these errors.

You can retry these errors. See Cancel and retry Data Analysis for details.
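The Precision and Recall substage reports two standard metrics. The Python sketch below uses the textbook definitions; how Data Breach Response counts correct, incorrect, and missed detections is not described here, so the three input counts and the function name are assumptions.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) from detection counts.

    true_positives:  detections that really are PI
    false_positives: detections that are not PI
    false_negatives: PI the detector missed
    """
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    # Guard against division by zero when nothing was predicted or nothing exists.
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall
```

For example, a detector with 8 correct detections, 2 incorrect detections, and 8 missed items has a precision of 0.8 and a recall of 0.5: most of what it flags is right, but it finds only half of the PI present.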

Data Analysis and document review

While Data Analysis is running, reviewers cannot add, edit, or delete entities or PI on documents. However, to reduce time to review, the Unstructured and Structured PI Detection stages follow a document streaming approach: as individual documents finish the PI Detection stage, they become available for review. Blocklisting, Entity Normalization & Deduplication, and Compile Insights do not follow this approach, so all documents must finish processing before any become available for review. Take this into consideration when selecting which stages to run.

Each document is assigned a Data Analysis Status to indicate its availability for review:

- Data Analysis run required—the initial status after ingestion. Indicates Data Analysis has not yet been run on the document.

- Not ready for review—the document is currently being processed through Blocklisting, PI Detection, or Compile Insights. The status will change to Not ready for review when Data Analysis is run and reviewers will not be able to edit the document.

- Running normalizer—the document is currently being processed through Entity Normalization & Deduplication. The status will change to Running normalizer when Entity Normalization & Deduplication is running and reviewers will not be able to edit the document.

- Ready for review—the document has finished processing through PI Detection and/or the Data Analysis run is complete. Reviewers are able to edit the document.

You can view a document’s Data Analysis Status on the Project Dashboard Document List. The field can be searched for using PI and Entity Search.
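The relationship between the running stage and a document's Data Analysis Status can be sketched as a small Python model. The enum values are the status labels listed above; the function names, the stage-name strings, and the idea of passing in a "document finished this stage" flag are assumptions for illustration only.

```python
from enum import Enum


class DataAnalysisStatus(Enum):
    RUN_REQUIRED = "Data Analysis run required"
    NOT_READY = "Not ready for review"
    RUNNING_NORMALIZER = "Running normalizer"
    READY = "Ready for review"


# Stages that release documents one by one (document streaming).
STREAMING_STAGES = {"Run Unstructured Detectors", "Run Structured Detectors"}

# Statuses in which reviewers cannot edit the document.
EDIT_BLOCKED = {DataAnalysisStatus.NOT_READY, DataAnalysisStatus.RUNNING_NORMALIZER}


def status_during_run(stage, document_finished_stage):
    """Return a document's status while the given stage is running."""
    if stage == "Run Normalization":
        return DataAnalysisStatus.RUNNING_NORMALIZER
    if stage in STREAMING_STAGES and document_finished_stage:
        return DataAnalysisStatus.READY
    return DataAnalysisStatus.NOT_READY


def blocks_editing(status):
    """True when reviewers cannot edit a document with this status."""
    return status in EDIT_BLOCKED
```

The sketch shows why stage selection affects reviewers: a document that has finished a streaming stage is released immediately, while a document in a non-streaming stage stays blocked until the stage completes for all documents.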

Cancel and retry Data Analysis

You can stop Data Analysis at any time while it is in progress. To stop it, select the Cancel Run button in the Project Actions console.

If a stage fails or Data Analysis is manually stopped, it can be restarted using the Retry button in the Progress card. Data Analysis will restart from the failed or interrupted stage when retrying.

To start a new run, select Run Data Analysis in the Project Actions console.
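The retry behavior can be pictured with a short Python sketch. It assumes that a retry resumes at the first failed or interrupted stage and then continues through the remaining selected stages; the function name and the status dictionary are hypothetical.

```python
def stages_to_retry(selected_stages, statuses):
    """Return the stages a retry would run, starting at the first
    failed or interrupted stage. Returns an empty list if none apply."""
    for position, stage in enumerate(selected_stages):
        if statuses.get(stage) in {"Failed", "Interrupted"}:
            return selected_stages[position:]
    return []
```

For instance, if Run Blocklisting completed and Run Unstructured Detectors was interrupted, the sketch returns Run Unstructured Detectors and every selected stage after it, leaving the completed stage alone.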

Document errors

The Errors field will indicate the number of documents that have errored or encountered an issue during that stage. Select View Errors or the View Errored Documents button in the console to view these documents and their specific errors on the Project Dashboard. For more information on how to address errors, see Document Flags.

Data Analysis history

Like other complex features in Relativity, Data Analysis lets you audit past runs through the View Run History modal.

The following information is available in View Run History:

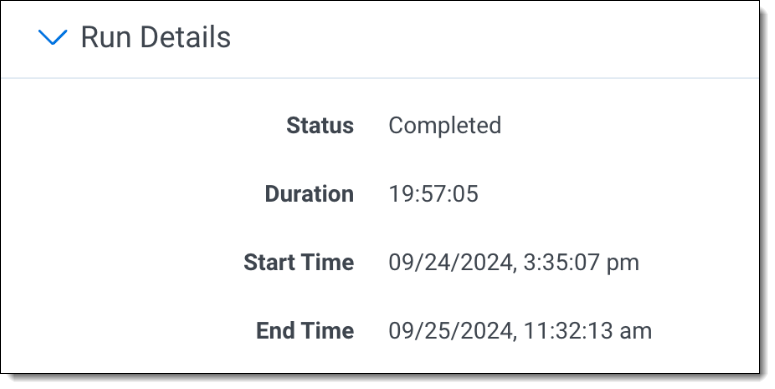

Run details

The following details appear in the Run Details section:

- Status—the status of the Data Analysis run

- Duration—the run time

- Start Time—the date and time the run was started

- End Time—the date and time the run ended

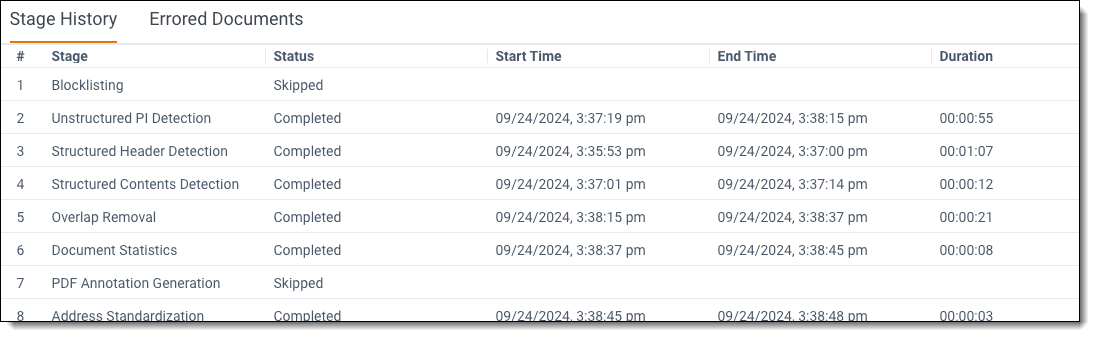

Stage history

The following details appear in the Stage history section:

- Stage—the name of the stage

- Status—the status of the stage

- Start Time—the date and time the stage was started

- End Time—the date and time the stage ended

- Duration—the run time of the stage